What is ChatGPT Retrieval Plugin

The ChatGPT Retrieval Plugin is a plugin that enables semantic search and retrieval of personal or organizational documents. Semantic search refers to the concept of understanding the intent and purpose of the search user and providing the most relevant results the user is seeking. Users can retrieve the most relevant chunks of documents stored in a Vector DB, which can include files, notes, emails, and other data sources, by asking questions or expressing their needs in natural language. By using this plugin, it is possible to return responses that reference internal documents through ChatGPT.

How does ChatGPT Retrieval Plugin work

The ChatGPT Retrieval Plugin works as follows:

- It uses OpenAI's

text-embedding-ada-002embedding model to generate embeddings for document chunks. - It stores the embeddings in a Vector DB.

- It executes queries against the Vector DB.

Supported Vector DBs

The ChatGPT Retrieval Plugin supports various Vector DB providers, each with different features and performance characteristics. Depending on the provider you choose, you may need to use different Dockerfiles and set different environment variables.

The following are the Vector DBs supported by the ChatGPT Retrieval Plugin:

- Pinecone

- Weaviate

- Zilliz

- Milvus

- Qdrant

- Redis

- LlamaIndex

- Chroma

- Azure Cognitive Search

- Supabase

- Postgres

- AnalyticDB

It is also possible to use a Vector DB that is not supported by default by editing the source code.

Setup

To set up and run the ChatGPT Retrieval Plugin, follow the steps below:

-

Install Python 3.10 if not already installed.

-

Clone the repository.

$ git clone https://github.com/openai/chatgpt-retrieval-plugin.git

- Navigate to the cloned repository directory.

$ cd /path/to/chatgpt-retrieval-plugin

- Install Poetry.

$ pip install poetry

- Create a new virtual environment using Python 3.10.

$ poetry env use python3.10

- Activate the virtual environment.

$ poetry shell

- Install the dependencies.

$ poetry install

- Create a Bearer token.

- Set the required environment variables.

DATASTORE, BEARER_TOKEN, and OPENAI_API_KEY are mandatory environment variables. Set the environment variables for the chosen Vector DB provider and any other required variables.

| Name | Required | Description |

|---|---|---|

DATASTORE |

Yes | Specifies the Vector DB provider. You can choose chroma, pinecone, weaviate, zilliz, milvus, qdrant, redis, azuresearch, supabase, postgres, or analyticdb for storing and querying embeddings. |

BEARER_TOKEN |

Yes | A secret token required for authenticating requests to the API. You can generate it using a tool or method of your choice, such as jwt.io. |

OPENAI_API_KEY |

Yes | The OpenAI API key required for generating embeddings using the text-embedding-ada-002 model. You can obtain an API key by creating an account on OpenAI. |

$ export DATASTORE=<your_datastore>

$ export BEARER_TOKEN=<your_bearer_token>

$ export OPENAI_API_KEY=<your_openai_api_key>

# Optional environment variables used when running Azure OpenAI

$ export OPENAI_API_BASE=https://<AzureOpenAIName>.openai.azure.com/

$ export OPENAI_API_TYPE=azure

$ export OPENAI_EMBEDDINGMODEL_DEPLOYMENTID=<Name of text-embedding-ada-002 model deployment>

$ export OPENAI_METADATA_EXTRACTIONMODEL_DEPLOYMENTID=<Name of deployment of model for metatdata>

$ export OPENAI_COMPLETIONMODEL_DEPLOYMENTID=<Name of general model deployment used for completion>

$ export OPENAI_EMBEDDING_BATCH_SIZE=<Batch size of embedding, for AzureOAI, this value need to be set as 1>

# Add the environment variables for your chosen vector DB.

# Some of these are optional; read the provider's setup docs in /docs/providers for more information.

# Pinecone

$ export PINECONE_API_KEY=<your_pinecone_api_key>

$ export PINECONE_ENVIRONMENT=<your_pinecone_environment>

$ export PINECONE_INDEX=<your_pinecone_index>

# Weaviate

$ export WEAVIATE_URL=<your_weaviate_instance_url>

$ export WEAVIATE_API_KEY=<your_api_key_for_WCS>

$ export WEAVIATE_CLASS=<your_optional_weaviate_class>

# Zilliz

$ export ZILLIZ_COLLECTION=<your_zilliz_collection>

$ export ZILLIZ_URI=<your_zilliz_uri>

$ export ZILLIZ_USER=<your_zilliz_username>

$ export ZILLIZ_PASSWORD=<your_zilliz_password>

# Milvus

$ export MILVUS_COLLECTION=<your_milvus_collection>

$ export MILVUS_HOST=<your_milvus_host>

$ export MILVUS_PORT=<your_milvus_port>

$ export MILVUS_USER=<your_milvus_username>

$ export MILVUS_PASSWORD=<your_milvus_password>

# Qdrant

$ export QDRANT_URL=<your_qdrant_url>

$ export QDRANT_PORT=<your_qdrant_port>

$ export QDRANT_GRPC_PORT=<your_qdrant_grpc_port>

$ export QDRANT_API_KEY=<your_qdrant_api_key>

$ export QDRANT_COLLECTION=<your_qdrant_collection>

# AnalyticDB

$ export PG_HOST=<your_analyticdb_host>

$ export PG_PORT=<your_analyticdb_port>

$ export PG_USER=<your_analyticdb_username>

$ export PG_PASSWORD=<your_analyticdb_password>

$ export PG_DATABASE=<your_analyticdb_database>

$ export PG_COLLECTION=<your_analyticdb_collection>

# Redis

$ export REDIS_HOST=<your_redis_host>

$ export REDIS_PORT=<your_redis_port>

$ export REDIS_PASSWORD=<your_redis_password>

$ export REDIS_INDEX_NAME=<your_redis_index_name>

$ export REDIS_DOC_PREFIX=<your_redis_doc_prefix>

$ export REDIS_DISTANCE_METRIC=<your_redis_distance_metric>

$ export REDIS_INDEX_TYPE=<your_redis_index_type>

# Llama

$ export LLAMA_INDEX_TYPE=<gpt_vector_index_type>

$ export LLAMA_INDEX_JSON_PATH=<path_to_saved_index_json_file>

$ export LLAMA_QUERY_KWARGS_JSON_PATH=<path_to_saved_query_kwargs_json_file>

$ export LLAMA_RESPONSE_MODE=<response_mode_for_query>

# Chroma

$ export CHROMA_COLLECTION=<your_chroma_collection>

$ export CHROMA_IN_MEMORY=<true_or_false>

$ export CHROMA_PERSISTENCE_DIR=<your_chroma_persistence_directory>

$ export CHROMA_HOST=<your_chroma_host>

$ export CHROMA_PORT=<your_chroma_port>

# Azure Cognitive Search

$ export AZURESEARCH_SERVICE=<your_search_service_name>

$ export AZURESEARCH_INDEX=<your_search_index_name>

$ export AZURESEARCH_API_KEY=<your_api_key> (optional, uses key-free managed identity if not set)

# Supabase

$ export SUPABASE_URL=<supabase_project_url>

$ export SUPABASE_ANON_KEY=<supabase_project_api_anon_key>

# Postgres

$ export PG_HOST=<postgres_host>

$ export PG_PORT=<postgres_port>

$ export PG_USER=<postgres_user>

$ export PG_PASSWORD=<postgres_password>

$ export PG_DATABASE=<postgres_database>

- Run the API locally.

$ poetry run start

- Access the API documentation at

http://0.0.0.0:8000/docsto test the API endpoints (don't forget to add the Bearer token).

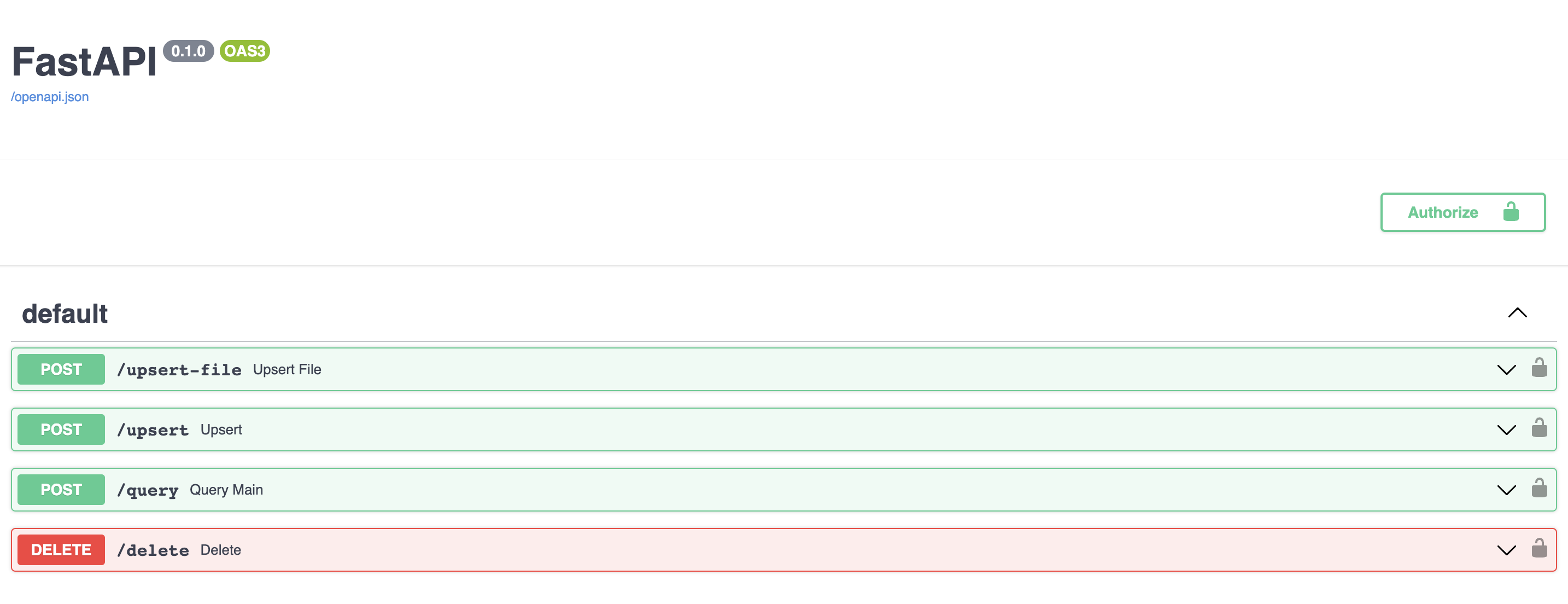

API Endpoints

The ChatGPT Retrieval Plugin is built using FastAPI, a web framework for building APIs with Python. FastAPI allows for easy development, validation, and documentation of API endpoints.

The plugin exposes the following endpoints for upserting, querying, and deleting documents from the vector database. All requests and responses are in JSON format, and require a valid bearer token as an authorization header.

-

/upsert

This endpoint allows uploading one or more documents and storing their text and metadata in the vector database. The documents are split into chunks of around 200 tokens, each with a unique ID. The endpoint expects a list of documents in the request body, each with atextfield, and optionalidandmetadatafields. Themetadatafield can contain the following optional subfields:source,source_id,url,created_at, andauthor. The endpoint returns a list of the IDs of the inserted documents (an ID is generated if not initially provided). -

/upsert-file

This endpoint allows uploading a single file (PDF, TXT, DOCX, PPTX, or MD) and storing its text and metadata in the vector database. The file is converted to plain text and split into chunks of around 200 tokens, each with a unique ID. The endpoint returns a list containing the generated id of the inserted file. -

/query

This endpoint allows querying the vector database using one or more natural language queries and optional metadata filters. The endpoint expects a list of queries in the request body, each with aqueryand optionalfilterandtop_kfields. Thefilterfield should contain a subset of the following subfields:source,source_id,document_id,url,created_at, andauthor. Thetop_kfield specifies how many results to return for a given query, and the default value is 3. The endpoint returns a list of objects that each contain a list of the most relevant document chunks for the given query, along with their text, metadata and similarity scores. -

/delete

This endpoint allows deleting one or more documents from the vector database using their IDs, a metadatafilter, or adelete_allflag. The endpoint expects at least one of the following parameters in the request body:ids,filter, ordelete_all. Theidsparameter should be a list of document IDs to delete; all document chunks for the document with these IDS will be deleted. Thefilterparameter should contain a subset of the following subfields:source,source_id,document_id,url,created_at, andauthor. Thedelete_allparameter should be a boolean indicating whether to delete all documents from the vector database. The endpoint returns a boolean indicating whether the deletion was successful.

References