Hugging Face Transformers

Hugging Face Transformers is an open-source library that provides pretrained Transformer models for NLP tasks. Developers and researchers can fine-tune these models for their own tasks, or use them as a starting point for building new models.

Hugging Face Transformers is compatible with the leading deep learning frameworks, such as TensorFlow and PyTorch.

Installation

Hugging Face Transformers can be installed in either of the following ways:

- Install with pip
- Install from the GitHub source

Install with pip

$ pip install transformers

Install from the GitHub source

$ git clone https://github.com/huggingface/transformers

$ cd transformers

$ pip install .
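After either installation route, it can help to confirm that Python actually sees the package. The following is a minimal sketch that uses only the standard library; the helper name `installed_version` is hypothetical and is not part of Transformers.

```python
import importlib.util
from importlib import metadata


def installed_version(package: str):
    """Return the installed version of `package`, or None if it is not importable."""
    if importlib.util.find_spec(package) is None:
        return None
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        # Importable, but without distribution metadata (for example, a bare source tree).
        return "unknown"


# Prints a version string if the install succeeded, or None otherwise.
print(installed_version("transformers"))
```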

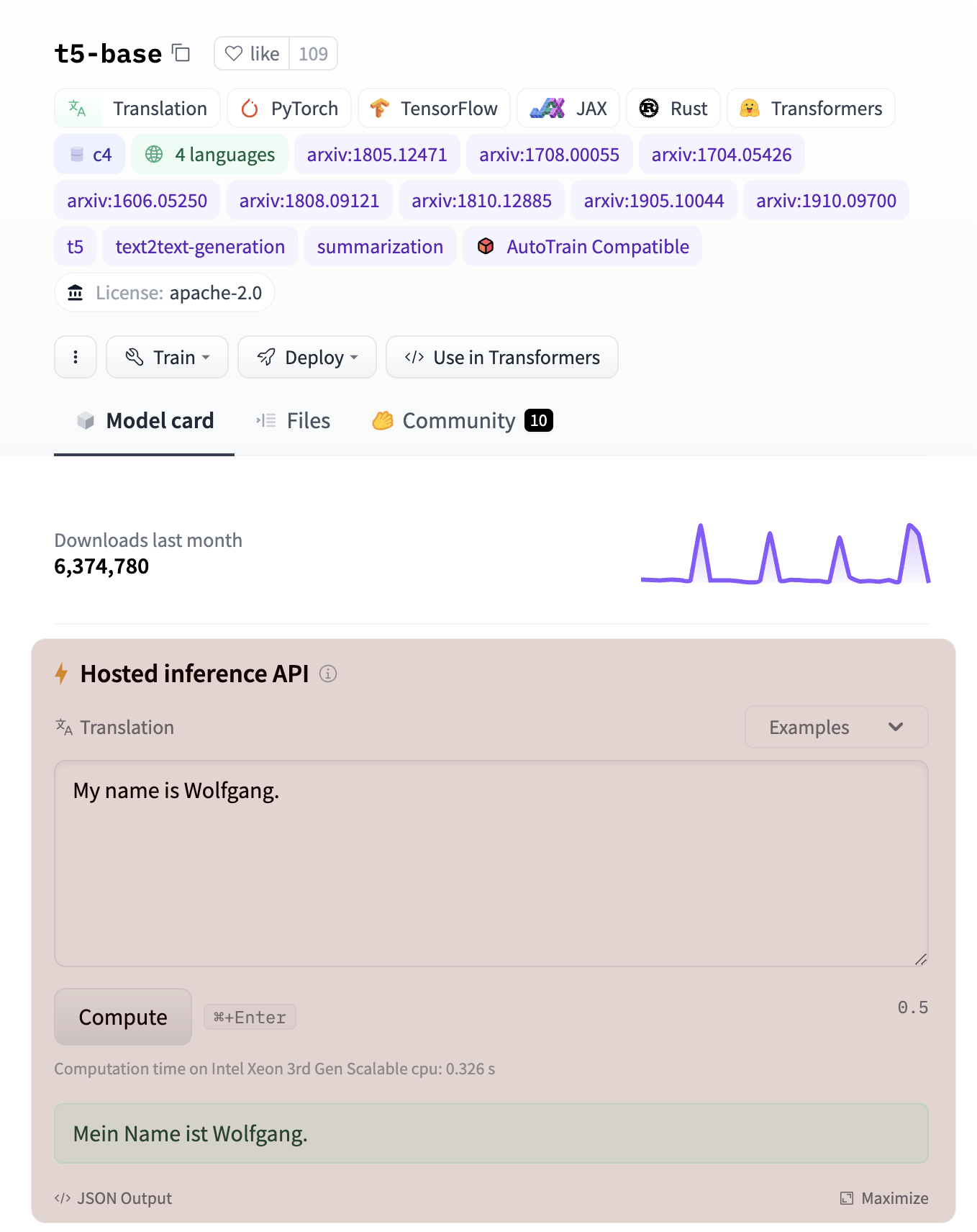

Online demo

You can try out the Inference API.

Code examples

The following GitHub repository provides example code for implementing Transformers.

Model architectures

The models supported by Hugging Face Transformers are listed at the following link.

The frameworks supported by Hugging Face Transformers can also be checked at the following link.

Pretrained models

The pretrained models provided by Hugging Face Transformers are listed at the following link.

Other models are provided by the community.

NLP tasks

The following NLP tasks are available in Hugging Face Transformers.

| Task | Description |
|---|---|
| Text Classification | Assigns a label or class to a text |
| Question Answering | Returns the answer to a question |
| Language Modeling | Predicts words in a sentence |
| Text Generation | Generates text |
| Named Entity Recognition | Identifies specific entities in a text, such as dates, people, and locations |
| Summarization | Produces a short document that summarizes the content of a given document |
| Translation | Converts a text from one language into another |

Hugging Face Transformers supports two ways of running inference:

- Pipeline
  - Provides an abstraction over the model that can be used in two lines of code
- Tokenizer
  - Provides full control over inference by working with the tokenizer and model directly
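To make the difference between the two routes concrete, here is a toy sketch of the three stages that a pipeline bundles together: tokenize, run the model, post-process. Every function in it (`toy_tokenize`, `toy_model`, `toy_postprocess`, `make_pipeline`) is a hypothetical stand-in written for illustration; none of them is a Transformers API, and the "model" is only a word-list lookup.

```python
from typing import Callable, Dict, List


def toy_tokenize(text: str) -> List[str]:
    """Stand-in tokenizer: lowercase and split on whitespace."""
    return text.lower().split()


def toy_model(tokens: List[str]) -> Dict[str, float]:
    """Stand-in model: score the text by counting words from two tiny word lists."""
    positive = {"love", "great", "good"}
    negative = {"hate", "bad", "awful"}
    score = sum(t in positive for t in tokens) - sum(t in negative for t in tokens)
    return {"POSITIVE": float(score), "NEGATIVE": float(-score)}


def toy_postprocess(scores: Dict[str, float]) -> Dict[str, object]:
    """Stand-in post-processing: pick the label with the highest score."""
    label = max(scores, key=scores.get)
    return {"label": label, "raw_score": scores[label]}


def make_pipeline(tokenize: Callable, model: Callable, postprocess: Callable) -> Callable:
    """Bundle the three stages into a single callable, as a pipeline does."""
    def run(text: str):
        return postprocess(model(tokenize(text)))
    return run


classifier = make_pipeline(toy_tokenize, toy_model, toy_postprocess)
print(classifier("I love you"))
print(classifier("I hate you"))
```

With the Tokenizer route, you call each stage yourself, which is more verbose but lets you inspect or change the intermediate values.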

The following tasks are available in Pipeline.

| Task | Description |
|---|---|
| feature-extraction | Given a text, returns a feature vector |
| sentiment-analysis | Given a text, returns the sentiment analysis result |
| question-answering | Given a question and a passage, returns the answer |
| fill-mask | Given a text with a masked position, returns words that fit the mask |
| text-generation | Given a text, returns a continuation of it |
| ner | Given a text, returns the named entity recognition result |
| summarization | Returns a summary of the input text |
| translation_xx_to_yy | Returns a translation of the input text |
| zero-shot-classification | Classifies a text against labels you supply, without requiring labeled training text |

Text Classification

The following is an example of text classification with Pipeline.

from transformers import pipeline

classifier = pipeline("sentiment-analysis")

print(classifier("I love you"))

print(classifier("I don't love you"))

print(classifier("I hate you"))

print(classifier("I don't hate you"))

[{'label': 'POSITIVE', 'score': 0.9998656511306763}]

[{'label': 'NEGATIVE', 'score': 0.9943438768386841}]

[{'label': 'NEGATIVE', 'score': 0.9991129040718079}]

[{'label': 'POSITIVE', 'score': 0.9985570311546326}]

The following is an example of text classification using the tokenizer and model directly.

from transformers import AutoTokenizer, AutoModelForSequenceClassification

import torch

tokenizer = AutoTokenizer.from_pretrained("bert-base-cased-finetuned-mrpc")

model = AutoModelForSequenceClassification.from_pretrained("bert-base-cased-finetuned-mrpc")

classes = ["not paraphrase", "is paraphrase"]

sequence_0 = "The company HuggingFace is based in New York City"

sequence_1 = "Apples are especially bad for your health"

sequence_2 = "HuggingFace's headquarters are situated in Manhattan"

# The tokenizer automatically adds any model-specific special tokens (e.g. [CLS] and [SEP]) to

# the sequence pair, and also computes the attention masks.

paraphrase = tokenizer(sequence_0, sequence_2, return_tensors="pt")

not_paraphrase = tokenizer(sequence_0, sequence_1, return_tensors="pt")

paraphrase_classification_logits = model(**paraphrase).logits

not_paraphrase_classification_logits = model(**not_paraphrase).logits

paraphrase_results = torch.softmax(paraphrase_classification_logits, dim=1).tolist()[0]

not_paraphrase_results = torch.softmax(not_paraphrase_classification_logits, dim=1).tolist()[0]

# Should be paraphrase

for i in range(len(classes)):

    print(f"{classes[i]}: {int(round(paraphrase_results[i] * 100))}%")

# Prints:

# not paraphrase: 10%

# is paraphrase: 90%

# Should not be paraphrase

for i in range(len(classes)):

    print(f"{classes[i]}: {int(round(not_paraphrase_results[i] * 100))}%")

# Prints:

# not paraphrase: 94%

# is paraphrase: 6%
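The percentages above come from applying softmax to the two logits the model returns. As a minimal sketch of that step in plain Python, the snippet below uses made-up logits (`example_logits` is an assumption, not real model output) to show how raw scores become probabilities that sum to 1.

```python
import math


def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    peak = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


classes = ["not paraphrase", "is paraphrase"]
example_logits = [-1.1, 1.1]  # made-up values for illustration only

probs = softmax(example_logits)
for name, p in zip(classes, probs):
    print(f"{name}: {round(p * 100)}%")
```

Only the difference between the logits matters: adding the same constant to both leaves the probabilities unchanged.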

Question Answering

The following is an example of question answering with Pipeline.

from transformers import pipeline

question_answerer = pipeline("question-answering")

context = r"""
Extractive Question Answering is the task of extracting an answer from a text given a question. An example of a
question answering dataset is the SQuAD dataset, which is entirely based on that task. If you would like to fine-tune
a model on a SQuAD task, you may leverage the `run_squad.py`.
"""

print(question_answerer(question="What is extractive question answering?", context=context))

print(question_answerer(question="What is a good example of a question answering dataset?", context=context))

{'score': 0.6222441792488098, 'start': 34, 'end': 95, 'answer': 'the task of extracting an answer from a text given a question'}

{'score': 0.511530339717865, 'start': 147, 'end': 160, 'answer': 'SQuAD dataset'}

The following is an example of question answering using the tokenizer and model directly.

from transformers import AutoTokenizer, AutoModelForQuestionAnswering

import torch

tokenizer = AutoTokenizer.from_pretrained("bert-large-uncased-whole-word-masking-finetuned-squad")

model = AutoModelForQuestionAnswering.from_pretrained("bert-large-uncased-whole-word-masking-finetuned-squad")

text = r"""

🤗 Transformers (formerly known as pytorch-transformers and pytorch-pretrained-bert) provides general-purpose

architectures (BERT, GPT-2, RoBERTa, XLM, DistilBert, XLNet…) for Natural Language Understanding (NLU) and Natural

Language Generation (NLG) with over 32+ pretrained models in 100+ languages and deep interoperability between

TensorFlow 2.0 and PyTorch.

"""

questions = [

    "How many pretrained models are available in 🤗 Transformers?",

    "What does 🤗 Transformers provide?",

    "🤗 Transformers provides interoperability between which frameworks?",

]

for question in questions:

    inputs = tokenizer(question, text, add_special_tokens=True, return_tensors="pt")

    input_ids = inputs["input_ids"].tolist()[0]

    outputs = model(**inputs)

    answer_start_scores = outputs.start_logits

    answer_end_scores = outputs.end_logits

    # Get the most likely beginning of answer with the argmax of the score

    answer_start = torch.argmax(answer_start_scores)

    # Get the most likely end of answer with the argmax of the score (+1 makes the slice end exclusive)

    answer_end = torch.argmax(answer_end_scores) + 1

    answer = tokenizer.convert_tokens_to_string(

        tokenizer.convert_ids_to_tokens(input_ids[answer_start:answer_end])

    )

    print(f"Question: {question}")

    print(f"Answer: {answer}\n")

Question: How many pretrained models are available in 🤗 Transformers?

Answer: over 32 +

Question: What does 🤗 Transformers provide?

Answer: general - purpose architectures

Question: 🤗 Transformers provides interoperability between which frameworks?

Answer: tensorflow 2. 0 and pytorch
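The span selection in the loop above can be sketched without a model. The snippet below takes per-token start and end scores, picks the argmax of each, and slices the tokens; the token list and scores are made up for illustration, and `best_span` is a hypothetical helper name.

```python
def best_span(tokens, start_scores, end_scores):
    """Pick the answer span from independent start and end scores."""
    start = max(range(len(start_scores)), key=start_scores.__getitem__)
    # +1 because Python slices exclude the end index.
    end = max(range(len(end_scores)), key=end_scores.__getitem__) + 1
    return tokens[start:end]


# Made-up tokens and scores, for illustration only.
tokens = ["[CLS]", "which", "?", "[SEP]", "tensorflow", "and", "pytorch", "[SEP]"]
start_scores = [0.1, 0.0, 0.0, 0.0, 2.5, 0.3, 0.4, 0.0]
end_scores = [0.1, 0.0, 0.0, 0.0, 0.2, 0.1, 3.0, 0.0]

print(best_span(tokens, start_scores, end_scores))
```

Because the start and end are chosen independently, the end can land before the start, in which case the slice is empty. A more careful implementation would search over valid (start, end) pairs instead.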

Language Modeling

There are two types of language modeling:

- Masked language modeling
  - Predicts the masked tokens in a sequence
- Causal language modeling
  - Predicts the next token in a sequence of tokens
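The two objectives differ in what the model sees and what it must predict. The sketch below builds toy (input, target) pairs for each on a list of words; the helper names are hypothetical, and real models operate on token IDs rather than whole words.

```python
def masked_lm_example(tokens, mask_index, mask_token="[MASK]"):
    """Hide one token; the model sees context on both sides and predicts it."""
    inputs = list(tokens)
    target = inputs[mask_index]
    inputs[mask_index] = mask_token
    return inputs, target


def causal_lm_examples(tokens):
    """For each position, the model sees only the left context and predicts the next token."""
    return [(tokens[:i], tokens[i]) for i in range(1, len(tokens))]


words = ["Hugging", "Face", "is", "based", "in", "New", "York"]

print(masked_lm_example(words, 3))
for context, target in causal_lm_examples(words):
    print(context, "->", target)
```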

Masked Language Modeling

The following is an example of masked language modeling with Pipeline.

from transformers import pipeline

from pprint import pprint

unmasker = pipeline("fill-mask")

pprint(

    unmasker(

        f"HuggingFace is creating a {unmasker.tokenizer.mask_token} that the community uses to solve NLP tasks."

    )

)

[{'score': 0.17927584052085876,

'sequence': 'HuggingFace is creating a tool that the community uses to solve '

'NLP tasks.',

'token': 3944,

'token_str': ' tool'},

{'score': 0.11349426209926605,

'sequence': 'HuggingFace is creating a framework that the community uses to '

'solve NLP tasks.',

'token': 7208,

'token_str': ' framework'},

{'score': 0.05243551358580589,

'sequence': 'HuggingFace is creating a library that the community uses to '

'solve NLP tasks.',

'token': 5560,

'token_str': ' library'},

{'score': 0.03493541106581688,

'sequence': 'HuggingFace is creating a database that the community uses to '

'solve NLP tasks.',

'token': 8503,

'token_str': ' database'},

{'score': 0.02860243059694767,

'sequence': 'HuggingFace is creating a prototype that the community uses to '

'solve NLP tasks.',

'token': 17715,

'token_str': ' prototype'}]

The following is an example of masked language modeling using the tokenizer and model directly.

from transformers import AutoModelForMaskedLM, AutoTokenizer

import torch

tokenizer = AutoTokenizer.from_pretrained("distilbert-base-cased")

model = AutoModelForMaskedLM.from_pretrained("distilbert-base-cased")

sequence = (

    "Distilled models are smaller than the models they mimic. Using them instead of the large "

    f"versions would help {tokenizer.mask_token} our carbon footprint."

)

inputs = tokenizer(sequence, return_tensors="pt")

mask_token_index = torch.where(inputs["input_ids"] == tokenizer.mask_token_id)[1]

token_logits = model(**inputs).logits

mask_token_logits = token_logits[0, mask_token_index, :]

top_5_tokens = torch.topk(mask_token_logits, 5, dim=1).indices[0].tolist()

for token in top_5_tokens:

    print(sequence.replace(tokenizer.mask_token, tokenizer.decode([token])))

Distilled models are smaller than the models they mimic. Using them instead of the large versions would help reduce our carbon footprint.

Distilled models are smaller than the models they mimic. Using them instead of the large versions would help increase our carbon footprint.

Distilled models are smaller than the models they mimic. Using them instead of the large versions would help decrease our carbon footprint.

Distilled models are smaller than the models they mimic. Using them instead of the large versions would help offset our carbon footprint.

Distilled models are smaller than the models they mimic. Using them instead of the large versions would help improve our carbon footprint.

Causal Language Modeling

The following is an example of causal language modeling using the tokenizer and model directly.

from transformers import AutoModelForCausalLM, AutoTokenizer, top_k_top_p_filtering

import torch

from torch import nn

tokenizer = AutoTokenizer.from_pretrained("gpt2")

model = AutoModelForCausalLM.from_pretrained("gpt2")

sequence = "Hugging Face is based in DUMBO, New York City, and"

inputs = tokenizer(sequence, return_tensors="pt")

input_ids = inputs["input_ids"]

# get the logits for the last position in the sequence

next_token_logits = model(**inputs).logits[:, -1, :]

# filter

filtered_next_token_logits = top_k_top_p_filtering(next_token_logits, top_k=50, top_p=1.0)

# sample

probs = nn.functional.softmax(filtered_next_token_logits, dim=-1)

next_token = torch.multinomial(probs, num_samples=1)

generated = torch.cat([input_ids, next_token], dim=-1)

resulting_string = tokenizer.decode(generated.tolist()[0])

print(resulting_string)

Hugging Face is based in DUMBO, New York City, and aims
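The filter-then-sample step can be sketched in plain Python. This is a simplified illustration of top-k filtering followed by sampling, not the library's implementation; the logits are made up, the helper names are hypothetical, and `k` is assumed to be at least 1.

```python
import math
import random


def top_k_filter(logits, k):
    """Keep the k largest logits and set the rest to -inf (assumes k >= 1)."""
    if k >= len(logits):
        return list(logits)
    threshold = sorted(logits, reverse=True)[k - 1]
    # Values tied with the threshold are all kept, so more than k can survive.
    return [x if x >= threshold else float("-inf") for x in logits]


def softmax(logits):
    """Turn logits into probabilities; -inf logits get probability 0."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def sample_next_token(logits, k, rng):
    """Sample a token index from the top-k filtered distribution."""
    probs = softmax(top_k_filter(logits, k))
    return rng.choices(range(len(probs)), weights=probs, k=1)[0]


toy_logits = [2.0, 0.5, 1.0, -1.0, 3.0]  # made-up scores over a 5-token vocabulary
rng = random.Random(0)

print(top_k_filter(toy_logits, 2))
print(sample_next_token(toy_logits, 2, rng))
```

Because the next token is sampled rather than chosen greedily, repeated runs of the original example can produce a different word after "and".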

Text Generation

The following is an example of text generation with Pipeline.

from transformers import pipeline

text_generator = pipeline("text-generation")

print(text_generator("As far as I am concerned, I will", max_length=50, do_sample=False))

[{'generated_text': 'As far as I am concerned, I will be the first to admit that I am not a fan of the idea of a "free market." I think that the idea of a free market is a bit of a stretch. I think that the idea'}]

The following is an example of text generation using the tokenizer and model directly.

from transformers import AutoModelForCausalLM, AutoTokenizer

model = AutoModelForCausalLM.from_pretrained("xlnet-base-cased")

tokenizer = AutoTokenizer.from_pretrained("xlnet-base-cased")

# Padding text helps XLNet with short prompts - proposed by Aman Rusia in https://github.com/rusiaaman/XLNet-gen#methodology

PADDING_TEXT = """In 1991, the remains of Russian Tsar Nicholas II and his family

(except for Alexei and Maria) are discovered.

The voice of Nicholas's young son, Tsarevich Alexei Nikolaevich, narrates the

remainder of the story. 1883 Western Siberia,

a young Grigori Rasputin is asked by his father and a group of men to perform magic.

Rasputin has a vision and denounces one of the men as a horse thief. Although his

father initially slaps him for making such an accusation, Rasputin watches as the

man is chased outside and beaten. Twenty years later, Rasputin sees a vision of

the Virgin Mary, prompting him to become a priest. Rasputin quickly becomes famous,

with people, even a bishop, begging for his blessing. <eod> </s> <eos>"""

prompt = "Today the weather is really nice and I am planning on "

inputs = tokenizer(PADDING_TEXT + prompt, add_special_tokens=False, return_tensors="pt")["input_ids"]

prompt_length = len(tokenizer.decode(inputs[0]))

outputs = model.generate(inputs, max_length=250, do_sample=True, top_p=0.95, top_k=60)

generated = prompt + tokenizer.decode(outputs[0])[prompt_length + 1 :]

print(generated)

Today the weather is really nice and I am planning on walking through the valley for my blog. I will write that I feel in my presence and I will do my best to inspire you and help you read this blog. When I know I will be out of the house for a while, I will post photos with a new title and to go through my blog and blog to show you the progress I have made with this blog
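The `generate` call above combines `top_k` with `top_p` (nucleus) sampling. As a rough sketch of the top-p idea only: keep the smallest set of most-probable tokens whose cumulative probability reaches `top_p`, then renormalize. The probabilities below are made up, `top_p_filter` is a hypothetical helper, and the library's exact handling of the boundary token may differ.

```python
def top_p_filter(probs, top_p):
    """Return {token_index: renormalized probability} for the nucleus."""
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    kept, cumulative = [], 0.0
    for i in order:
        kept.append(i)
        cumulative += probs[i]
        if cumulative >= top_p:
            break  # the nucleus now covers at least top_p of the mass
    total = sum(probs[i] for i in kept)
    return {i: probs[i] / total for i in kept}


toy_probs = [0.5, 0.3, 0.15, 0.05]  # made-up distribution over 4 tokens

print(top_p_filter(toy_probs, 0.9))
print(top_p_filter(toy_probs, 0.5))
```

Unlike top-k, the number of candidates adapts to the distribution: a confident model keeps few tokens, an uncertain one keeps many.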

Named Entity Recognition

Named entity recognition classifies each token into one of the following nine classes.

- O: outside of a named entity
- B-MISC: beginning of a miscellaneous entity right after another miscellaneous entity
- I-MISC: miscellaneous entity
- B-PER: beginning of a person's name right after another person's name
- I-PER: person's name
- B-ORG: beginning of an organization right after another organization
- I-ORG: organization
- B-LOC: beginning of a location right after another location
- I-LOC: location

The following is an example of named entity recognition with Pipeline.

from transformers import pipeline

ner_pipe = pipeline("ner")

sequence = """Hugging Face Inc. is a company based in New York City. Its headquarters are in DUMBO,
therefore very close to the Manhattan Bridge which is visible from the window."""

for entity in ner_pipe(sequence):

    print(entity)

{'entity': 'I-ORG', 'score': 0.99957865, 'index': 1, 'word': 'Hu', 'start': 0, 'end': 2}

{'entity': 'I-ORG', 'score': 0.9909764, 'index': 2, 'word': '##gging', 'start': 2, 'end': 7}

{'entity': 'I-ORG', 'score': 0.9982224, 'index': 3, 'word': 'Face', 'start': 8, 'end': 12}

{'entity': 'I-ORG', 'score': 0.9994879, 'index': 4, 'word': 'Inc', 'start': 13, 'end': 16}

{'entity': 'I-LOC', 'score': 0.9994344, 'index': 11, 'word': 'New', 'start': 40, 'end': 43}

{'entity': 'I-LOC', 'score': 0.99931955, 'index': 12, 'word': 'York', 'start': 44, 'end': 48}

{'entity': 'I-LOC', 'score': 0.9993794, 'index': 13, 'word': 'City', 'start': 49, 'end': 53}

{'entity': 'I-LOC', 'score': 0.98625827, 'index': 19, 'word': 'D', 'start': 79, 'end': 80}

{'entity': 'I-LOC', 'score': 0.95142686, 'index': 20, 'word': '##UM', 'start': 80, 'end': 82}

{'entity': 'I-LOC', 'score': 0.933659, 'index': 21, 'word': '##BO', 'start': 82, 'end': 84}

{'entity': 'I-LOC', 'score': 0.9761654, 'index': 28, 'word': 'Manhattan', 'start': 114, 'end': 123}

{'entity': 'I-LOC', 'score': 0.9914629, 'index': 29, 'word': 'Bridge', 'start': 124, 'end': 130}

The following is an example of named entity recognition using the tokenizer and model directly.

from transformers import AutoModelForTokenClassification, AutoTokenizer

import torch

model = AutoModelForTokenClassification.from_pretrained("dbmdz/bert-large-cased-finetuned-conll03-english")

tokenizer = AutoTokenizer.from_pretrained("bert-base-cased")

sequence = (

    "Hugging Face Inc. is a company based in New York City. Its headquarters are in DUMBO, "

    "therefore very close to the Manhattan Bridge."

)

inputs = tokenizer(sequence, return_tensors="pt")

tokens = inputs.tokens()

outputs = model(**inputs).logits

predictions = torch.argmax(outputs, dim=2)

for token, prediction in zip(tokens, predictions[0].numpy()):

    print((token, model.config.id2label[prediction]))

('[CLS]', 'O')

('Hu', 'I-ORG')

('##gging', 'I-ORG')

('Face', 'I-ORG')

('Inc', 'I-ORG')

('.', 'O')

('is', 'O')

('a', 'O')

('company', 'O')

('based', 'O')

('in', 'O')

('New', 'I-LOC')

('York', 'I-LOC')

('City', 'I-LOC')

('.', 'O')

('Its', 'O')

('headquarters', 'O')

('are', 'O')

('in', 'O')

('D', 'I-LOC')

('##UM', 'I-LOC')

('##BO', 'I-LOC')

(',', 'O')

('therefore', 'O')

('very', 'O')

('close', 'O')

('to', 'O')

('the', 'O')

('Manhattan', 'I-LOC')

('Bridge', 'I-LOC')

('.', 'O')

('[SEP]', 'O')
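The output above is per word piece, so "DUMBO" appears as three separate tokens. A minimal sketch of how such pairs could be merged into whole entities is shown below. `group_entities` is a hypothetical helper that assumes BERT-style `##` continuation pieces; it is not the library's own aggregation logic (in recent releases the `ner` pipeline also accepts an `aggregation_strategy` argument for this purpose).

```python
def group_entities(tagged_tokens):
    """Merge (token, label) pairs into (entity text, entity type) tuples."""
    entities = []
    words, entity_type = [], None

    def flush():
        nonlocal words, entity_type
        if words:
            entities.append((" ".join(words), entity_type))
        words, entity_type = [], None

    for token, label in tagged_tokens:
        if label == "O":
            flush()
            continue
        prefix, _, kind = label.partition("-")
        # A B- tag or a change of type starts a new entity.
        if prefix == "B" or kind != entity_type:
            flush()
        if token.startswith("##") and words:
            words[-1] += token[2:]  # glue the word piece onto the previous word
        else:
            words.append(token)
        entity_type = kind
    flush()
    return entities


# A shortened copy of the (token, label) pairs printed above.
sample = [
    ("[CLS]", "O"), ("Hu", "I-ORG"), ("##gging", "I-ORG"), ("Face", "I-ORG"),
    ("Inc", "I-ORG"), (".", "O"), ("in", "O"), ("New", "I-LOC"), ("York", "I-LOC"),
    ("City", "I-LOC"), (".", "O"), ("D", "I-LOC"), ("##UM", "I-LOC"),
    ("##BO", "I-LOC"), (",", "O"), ("[SEP]", "O"),
]

for entity in group_entities(sample):
    print(entity)
```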

Summarization

The following is an example of summarization with Pipeline.

from transformers import pipeline

summarizer = pipeline("summarization")

ARTICLE = """ New York (CNN)When Liana Barrientos was 23 years old, she got married in Westchester County, New York.

A year later, she got married again in Westchester County, but to a different man and without divorcing her first husband.

Only 18 days after that marriage, she got hitched yet again. Then, Barrientos declared "I do" five more times, sometimes only within two weeks of each other.

In 2010, she married once more, this time in the Bronx. In an application for a marriage license, she stated it was her "first and only" marriage.

Barrientos, now 39, is facing two criminal counts of "offering a false instrument for filing in the first degree," referring to her false statements on the

2010 marriage license application, according to court documents.

Prosecutors said the marriages were part of an immigration scam.

On Friday, she pleaded not guilty at State Supreme Court in the Bronx, according to her attorney, Christopher Wright, who declined to comment further.

After leaving court, Barrientos was arrested and charged with theft of service and criminal trespass for allegedly sneaking into the New York subway through an emergency exit, said Detective

Annette Markowski, a police spokeswoman. In total, Barrientos has been married 10 times, with nine of her marriages occurring between 1999 and 2002.

All occurred either in Westchester County, Long Island, New Jersey or the Bronx. She is believed to still be married to four men, and at one time, she was married to eight men at once, prosecutors say.

Prosecutors said the immigration scam involved some of her husbands, who filed for permanent residence status shortly after the marriages.

Any divorces happened only after such filings were approved. It was unclear whether any of the men will be prosecuted.

The case was referred to the Bronx District Attorney\'s Office by Immigration and Customs Enforcement and the Department of Homeland Security\'s

Investigation Division. Seven of the men are from so-called "red-flagged" countries, including Egypt, Turkey, Georgia, Pakistan and Mali.

Her eighth husband, Rashid Rajput, was deported in 2006 to his native Pakistan after an investigation by the Joint Terrorism Task Force.

If convicted, Barrientos faces up to four years in prison. Her next court appearance is scheduled for May 18.

"""

print(summarizer(ARTICLE, max_length=130, min_length=30, do_sample=False))

[{'summary_text': ' Liana Barrientos, 39, is charged with two counts of "offering a false instrument for filing in the first degree" In total, she has been married 10 times, with nine of her marriages occurring between 1999 and 2002 . At one time, she was married to eight men at once, prosecutors say .'}]

The following is an example of summarization using the tokenizer and model directly.

from transformers import AutoModelForSeq2SeqLM, AutoTokenizer

model = AutoModelForSeq2SeqLM.from_pretrained("t5-base")

tokenizer = AutoTokenizer.from_pretrained("t5-base")

# T5 uses a max_length of 512 so we cut the article to 512 tokens.

inputs = tokenizer("summarize: " + ARTICLE, return_tensors="pt", max_length=512, truncation=True)

outputs = model.generate(

    inputs["input_ids"], max_length=150, min_length=40, length_penalty=2.0, num_beams=4, early_stopping=True

)

print(tokenizer.decode(outputs[0]))

<pad> prosecutors say the marriages were part of an immigration scam. if convicted, barrientos faces two criminal counts of "offering a false instrument for filing in the first degree" she has been married 10 times, nine of them between 1999 and 2002.</s>

Translation

The following is an example of using Pipeline to translate from English to German.

from transformers import pipeline

translator = pipeline("translation_en_to_de")

print(translator("Hugging Face is a technology company based in New York and Paris", max_length=40))

[{'translation_text': 'Hugging Face ist ein Technologieunternehmen mit Sitz in New York und Paris.'}]

The following is an example of translating from English to German using the tokenizer and model directly.

from transformers import AutoModelForSeq2SeqLM, AutoTokenizer

model = AutoModelForSeq2SeqLM.from_pretrained("t5-base")

tokenizer = AutoTokenizer.from_pretrained("t5-base")

inputs = tokenizer(

    "translate English to German: Hugging Face is a technology company based in New York and Paris",

    return_tensors="pt",

)

outputs = model.generate(inputs["input_ids"], max_length=40, num_beams=4, early_stopping=True)

print(tokenizer.decode(outputs[0]))

<pad> Hugging Face ist ein Technologieunternehmen mit Sitz in New York und Paris.</s>
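Two details of the T5 examples are worth spelling out: the task is selected by a text prefix ("summarize: " or "translate English to German: "), and the decoded output still contains the special tokens `<pad>` and `</s>`. The sketch below illustrates both with plain string handling; the helper names are hypothetical. In the library itself, passing `skip_special_tokens=True` to `tokenizer.decode` removes the special tokens for you.

```python
def build_t5_input(task_prefix: str, text: str) -> str:
    """Prepend the task prefix that tells T5 which task to perform."""
    return f"{task_prefix}: {text}"


def strip_special_tokens(decoded: str, special_tokens=("<pad>", "</s>")) -> str:
    """Remove special-token markers from an already decoded string."""
    for token in special_tokens:
        decoded = decoded.replace(token, "")
    return decoded.strip()


prompt = build_t5_input(
    "translate English to German",
    "Hugging Face is a technology company based in New York and Paris",
)
print(prompt)

raw_output = "<pad> Hugging Face ist ein Technologieunternehmen mit Sitz in New York und Paris.</s>"
print(strip_special_tokens(raw_output))
```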

References