Introduction

As computational demands grow, programs increasingly rely on parallelism to make full use of the hardware they run on. Concurrency, in which multiple tasks make progress during overlapping periods of time, is central to that goal. There are two primary ways to achieve it in software: multi-processing and multi-threading. Understanding the concepts behind each approach, and where each applies in practice, is essential for any software developer or computer scientist.

Multithreading vs. Multiprocessing in Python

Multi-Processing

Multi-processing involves executing concurrent tasks in separate processes, each running in its own memory space and operating system environment. This separation provides isolation, which prevents one process from affecting another in case of an error or crash. Additionally, multi-processing allows better utilization of multi-core processors, as each process can be scheduled to run on a separate core. However, multi-processing can be more resource-intensive and require complex synchronization and communication mechanisms between processes.

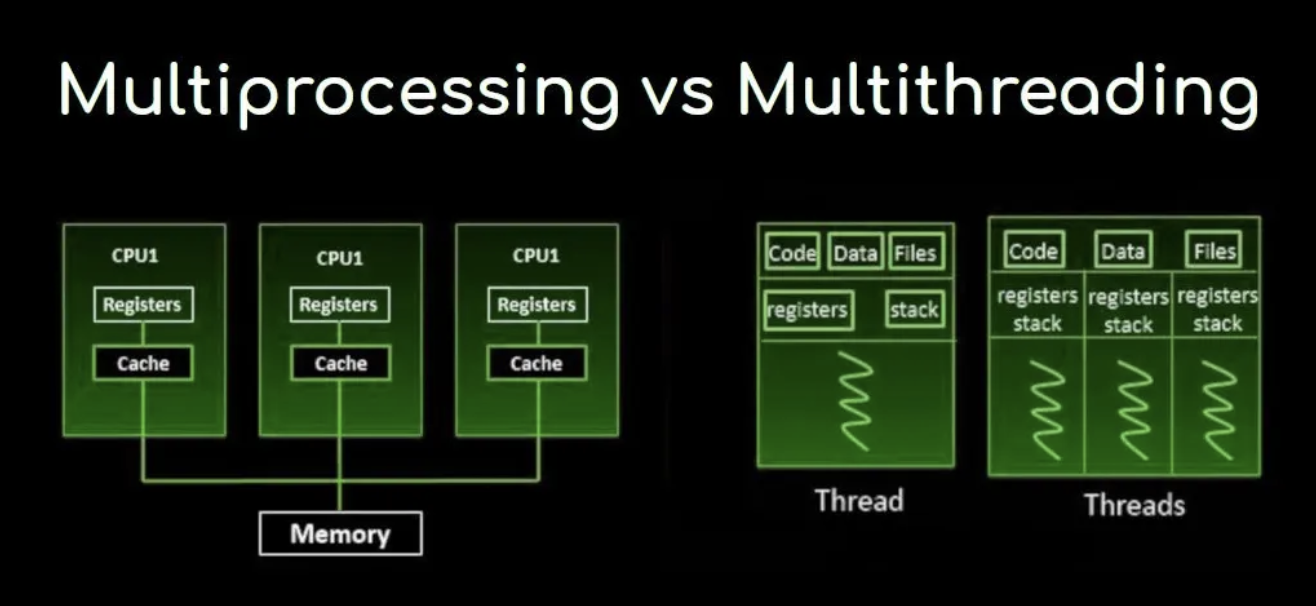

Process vs. Thread

A process is an independent program execution unit that has its own memory space, file handles, and other resources. A thread, on the other hand, is a lightweight execution unit within a process, sharing the same memory space and resources. Multi-processing involves running concurrent tasks in separate processes, while multi-threading uses multiple threads within the same process.
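The memory-sharing difference can be seen in a minimal sketch, assuming Python's standard threading and multiprocessing modules. The names counter, bump, bump_in_thread, and bump_in_process are illustrative and not from the original text.

```python
import multiprocessing as mp
import threading

# Module-level state: visible to every thread in this process.
counter = {"value": 0}


def bump() -> None:
    counter["value"] += 1


def bump_in_thread() -> int:
    """Run bump() in a second thread, then read the counter in this process."""
    worker = threading.Thread(target=bump)
    worker.start()
    worker.join()
    return counter["value"]


def bump_in_process() -> int:
    """Run bump() in a child process, then read the counter in this process."""
    worker = mp.Process(target=bump)
    worker.start()
    worker.join()
    return counter["value"]


if __name__ == "__main__":
    print(bump_in_thread())   # 1: the thread modified this process's dictionary
    print(bump_in_process())  # 1 again: the child modified only its own copy
```

The thread's update is visible to the caller because both share one memory space. The child process works on its own copy of the dictionary, so the parent's value does not change.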

Process Creation and Management

Creating and managing processes is typically more resource-intensive than managing threads. Operating systems provide mechanisms to create, manage, and terminate processes. Each process has a unique process identifier (PID) and can create child processes through operating system calls such as fork() on Unix-like systems or CreateProcess() on Windows.
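In Python, process creation is usually done through the multiprocessing module rather than by calling the operating system directly. The following is a minimal sketch under that assumption; report_pid, child_task, and run_child are hypothetical helper names.

```python
import multiprocessing as mp
import os


def report_pid(label: str) -> str:
    """Describe the process that is running this function."""
    return f"{label}: pid={os.getpid()}, parent={os.getppid()}"


def child_task(label: str) -> None:
    # Runs in the child, so os.getpid() differs from the parent's PID.
    print(report_pid(label))


def run_child() -> int:
    """Start one child process, wait for it, and return its exit code."""
    child = mp.Process(target=child_task, args=("child",))
    child.start()  # the operating system creates a new process here
    child.join()   # block until the child terminates
    return child.exitcode


if __name__ == "__main__":
    print(report_pid("parent"))
    print("child exit code:", run_child())
```

The `if __name__ == "__main__":` guard matters: on platforms where child processes re-import the main module, it keeps the child from starting further children of its own.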

Inter-Process Communication (IPC)

Processes run in isolated memory spaces, which means they cannot directly access each other's data. To share data or communicate, processes use IPC mechanisms such as pipes, message queues, shared memory, or sockets.
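As one illustration of a message-queue style of IPC, the sketch below passes numbers to a child process and reads results back through multiprocessing.Queue. The function names and the use of None as a stop signal are assumptions made for this example.

```python
import multiprocessing as mp


def square_worker(inbox, outbox) -> None:
    """Read numbers from inbox until None arrives; put each square on outbox."""
    while True:
        item = inbox.get()
        if item is None:  # sentinel: no more work
            break
        outbox.put(item * item)


def square_in_child(numbers):
    """Send numbers to a child process and collect the squares it sends back."""
    inbox, outbox = mp.Queue(), mp.Queue()
    child = mp.Process(target=square_worker, args=(inbox, outbox))
    child.start()
    for n in numbers:
        inbox.put(n)
    inbox.put(None)
    # Collect results before join() so the child never blocks on unread data.
    results = [outbox.get() for _ in numbers]
    child.join()
    return results


if __name__ == "__main__":
    print(square_in_child([1, 2, 3]))  # [1, 4, 9]
```

Each value placed on a queue is serialized, copied to the other process, and deserialized there, which is part of the communication cost that threads avoid.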

Synchronization Mechanisms for Processes

Processes may need to synchronize their actions to ensure proper execution of concurrent tasks. Common synchronization primitives for processes include semaphores, mutexes, and condition variables. These mechanisms help coordinate access to shared resources and prevent race conditions.
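A small sketch of a mutex shared between processes, assuming Python's multiprocessing.Value, which stores an integer in shared memory and carries its own lock. The names add_many and count_with_processes are hypothetical.

```python
import multiprocessing as mp


def add_many(counter, times: int) -> None:
    """Increment a shared counter `times` times, holding its lock each time."""
    for _ in range(times):
        with counter.get_lock():  # only one process may update at a time
            counter.value += 1


def count_with_processes(workers: int = 4, times: int = 10_000) -> int:
    counter = mp.Value("i", 0)  # a C int in shared memory with a built-in lock
    procs = [
        mp.Process(target=add_many, args=(counter, times))
        for _ in range(workers)
    ]
    for p in procs:
        p.start()
    for p in procs:
        p.join()
    return counter.value


if __name__ == "__main__":
    print(count_with_processes())  # 40000
```

Without the lock, two processes could read the same old value and both write back the same new one, losing an increment.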

Multi-Threading

Multi-threading involves executing concurrent tasks within the same process using multiple threads. Threads share the same memory space as their parent process, which makes communication and data sharing between them more efficient. However, this shared memory model increases the risk of race conditions and other synchronization issues. Multi-threading is generally less resource-intensive compared to multi-processing, but it requires careful management of shared resources to avoid performance bottlenecks and potential deadlocks.

Thread Creation and Management

Threads are lightweight execution units within a process, sharing the same memory space and resources as their parent process. Creating and managing threads is generally less resource-intensive than managing processes. Operating systems and programming languages provide mechanisms to create, manage, and terminate threads, such as the pthread_create() function in C or the Thread class in Java.
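The Python counterpart is the threading.Thread class. The sketch below starts a few threads that all write into one list. It is only an illustration, and record_name and run_threads are made-up names.

```python
import threading


def record_name(results: list, index: int) -> None:
    # Every thread writes into the same list because threads share memory.
    results[index] = threading.current_thread().name


def run_threads(count: int = 3) -> list:
    results = [None] * count
    threads = [
        threading.Thread(target=record_name, args=(results, i), name=f"worker-{i}")
        for i in range(count)
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()  # wait for every thread before reading the results
    return results


if __name__ == "__main__":
    print(run_threads())  # ['worker-0', 'worker-1', 'worker-2']
```

Each thread writes to its own slot in the list, so no lock is needed here.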

Thread Synchronization Mechanisms

Threads within a process share memory, which can lead to race conditions and other synchronization issues if not properly managed. Common synchronization primitives for threads include mutexes, semaphores, condition variables, and barriers. These mechanisms help coordinate access to shared resources and ensure correct execution order.
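A minimal sketch of a mutex protecting shared state, assuming Python's threading.Lock. SafeCounter and count_with_threads are illustrative names rather than part of any library.

```python
import threading


class SafeCounter:
    """A counter whose increment is protected by a mutex (threading.Lock)."""

    def __init__(self) -> None:
        self.value = 0
        self._lock = threading.Lock()

    def increment(self) -> None:
        # The read-modify-write below is not atomic, so it runs under the lock.
        with self._lock:
            self.value += 1


def count_with_threads(workers: int = 8, times: int = 10_000) -> int:
    counter = SafeCounter()

    def work() -> None:
        for _ in range(times):
            counter.increment()

    threads = [threading.Thread(target=work) for _ in range(workers)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return counter.value


if __name__ == "__main__":
    print(count_with_threads())  # 80000
```

The `with` statement releases the lock even if the protected code raises an exception, which avoids one common source of deadlocks.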

Thread Pools and Executors

Manually managing threads can be complex and error-prone. Thread pools and executors provide higher-level abstractions for managing concurrency. A thread pool is a collection of pre-created threads that can execute tasks concurrently. Executors provide a mechanism for submitting tasks to a thread pool and managing their execution, such as the ThreadPoolExecutor class in Java or the concurrent.futures module in Python.
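As a sketch of the executor pattern in Python, the example below submits work to a concurrent.futures.ThreadPoolExecutor in two ways. The slow_length function is a hypothetical stand-in for an I/O-bound call, and the short sleep is an assumption used only to simulate waiting.

```python
from concurrent.futures import ThreadPoolExecutor, as_completed
import time


def slow_length(text: str) -> int:
    time.sleep(0.05)  # stand-in for waiting on I/O
    return len(text)


def lengths_in_order(items, max_workers: int = 4):
    """map() returns results in the same order as the inputs."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(slow_length, items))


def lengths_by_item(items, max_workers: int = 4):
    """submit() returns one future per task; as_completed() yields them as they finish."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = {pool.submit(slow_length, item): item for item in items}
        return {futures[f]: f.result() for f in as_completed(futures)}


if __name__ == "__main__":
    print(lengths_in_order(["a", "bb", "ccc"]))  # [1, 2, 3]
    print(lengths_by_item(["a", "bb"]))
```

Leaving the `with` block waits for all submitted tasks and shuts the pool down, so no thread has to be joined by hand.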

Concurrent Data Structures

When multiple threads access shared data structures, careful management of shared resources is crucial. Concurrent data structures are designed to be safely accessed by multiple threads without the need for explicit synchronization in the calling code. Examples include ConcurrentHashMap in Java and queue.Queue in Python. These data structures use internal synchronization mechanisms to ensure thread-safe operation.
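As an illustration of a thread-safe structure, Python's standard queue.Queue does its own locking, so producers and consumers can call put() and get() without holding a lock of their own. The worker layout below, with one None sentinel per worker, is an assumption chosen for this sketch.

```python
import queue
import threading


def doubling_worker(tasks: queue.Queue, results: queue.Queue) -> None:
    """Take numbers from tasks until None arrives; put each doubled value on results."""
    while True:
        item = tasks.get()
        if item is None:  # sentinel: this worker should stop
            break
        results.put(item * 2)


def double_all(numbers, workers: int = 3):
    tasks: queue.Queue = queue.Queue()
    results: queue.Queue = queue.Queue()
    threads = [
        threading.Thread(target=doubling_worker, args=(tasks, results))
        for _ in range(workers)
    ]
    for t in threads:
        t.start()
    for n in numbers:
        tasks.put(n)
    for _ in threads:
        tasks.put(None)  # one sentinel per worker
    for t in threads:
        t.join()
    # Completion order varies between runs, so sort for a stable result.
    return sorted(results.get() for _ in numbers)


if __name__ == "__main__":
    print(double_all([3, 1, 2]))  # [2, 4, 6]
```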

Comparing Multi-Processing and Multi-Threading

In this section, I will examine the key differences between multi-processing and multi-threading, focusing on performance, scalability, resource management, and complexity.

Performance

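Multi-processing often performs better on CPU-bound tasks, because each process can be scheduled on a separate core, minimizing contention for processing resources. This matters especially in standard CPython builds, where the global interpreter lock (GIL) allows only one thread at a time to execute Python bytecode. Multi-threading is often the better fit for I/O-bound tasks: threads spend most of their time waiting, they are cheaper to create than processes, and they can share data without inter-process communication.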
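This rule of thumb can be sketched with the two executors in concurrent.futures. The example assumes a standard CPython build, where threads do not run Python bytecode in parallel. The count_primes and simulated_download functions are hypothetical workloads chosen to be CPU-bound and I/O-bound respectively.

```python
from concurrent.futures import ProcessPoolExecutor, ThreadPoolExecutor
import time


def count_primes(limit: int) -> int:
    """CPU-bound work: count the primes below limit by trial division."""
    count = 0
    for n in range(2, limit):
        if all(n % d for d in range(2, int(n ** 0.5) + 1)):
            count += 1
    return count


def simulated_download(seconds: float) -> float:
    """I/O-bound work: the thread only waits, as it would on a network call."""
    time.sleep(seconds)
    return seconds


def run_cpu_bound(limits):
    # Separate processes, so each task can use its own core.
    with ProcessPoolExecutor() as pool:
        return list(pool.map(count_primes, limits))


def run_io_bound(delays):
    # Threads are enough here: while one waits, the others can proceed.
    with ThreadPoolExecutor(max_workers=max(1, len(delays))) as pool:
        return list(pool.map(simulated_download, delays))


if __name__ == "__main__":
    print(run_cpu_bound([10, 100]))      # [4, 25]
    print(run_io_bound([0.1, 0.1, 0.1]))
```

For very small tasks, the cost of starting processes and copying arguments can outweigh the gain, so it is worth measuring before choosing one over the other.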

Scalability

Multi-processing can scale better on systems with multiple cores or processors, as processes can be distributed across these resources. On the other hand, multi-threading can be more scalable in terms of memory usage, since threads share memory with their parent process, reducing the overall memory footprint.

Resource Management

Multi-processing tends to consume more system resources, such as memory and file descriptors, due to the overhead of creating and managing separate processes. Multi-threading, however, requires careful management of shared resources to avoid potential synchronization issues and contention for resources, which can degrade performance.

Complexity

Implementing multi-processing can be more complex than multi-threading, particularly when it comes to inter-process communication and synchronization. However, multi-threading presents its own challenges, such as managing shared resources and avoiding race conditions, deadlocks, and other synchronization issues.

Popular Programming Languages and Libraries for Concurrency

Different programming languages and libraries offer various levels of support for multi-processing and multi-threading. In this section, I will briefly discuss the concurrency features available in popular languages like Python, Java, C++, and Go.

Python

Python provides the multiprocessing module for multi-processing and the threading module for multi-threading. Additionally, the concurrent.futures module offers a high-level interface for asynchronously executing callables.

Java

Java has built-in support for multi-threading through the java.lang.Thread class and the java.util.concurrent package, which includes various concurrency utilities, such as thread pools, synchronizers, and concurrent data structures.

C++

C++11 introduced the <thread> header, which provides support for multi-threading. The C++ Standard Library also includes synchronization primitives and facilities for retrieving asynchronous results in the <mutex>, <condition_variable>, and <future> headers.

Go

Go is designed with concurrency in mind, featuring lightweight goroutines and channels for communication between them. The sync package provides synchronization primitives, and the context package allows for cancellation and timeouts in concurrent operations.

Practical Applications of Multi-Processing and Multi-Threading

Concurrency is employed in various domains to improve performance and responsiveness. In this section, I will explore some practical applications where multi-processing and multi-threading are commonly used.

Web Servers

Web servers handle multiple requests simultaneously, often using multi-threading or multi-processing to distribute incoming requests across available resources, improving responsiveness and throughput.

Scientific Computing

In scientific computing, parallelism is crucial for processing large datasets and performing complex calculations. Multi-processing and multi-threading can significantly reduce the execution time of computationally intensive tasks.

Big Data Processing

Concurrency is essential for processing massive amounts of data, whether in batches or in near real time. Big data frameworks, such as Apache Hadoop and Apache Spark, use multi-processing and multi-threading to distribute tasks efficiently across clusters of machines.

Video Processing

Video processing tasks, such as encoding, decoding, and editing, can be computationally demanding. Multi-threading and multi-processing can be employed to parallelize these tasks and improve processing times.
