What is Kubernetes?

Kubernetes, often abbreviated as K8s, is an open-source container orchestration platform that automates the deployment, scaling, and management of containerized applications. Containers are lightweight, standalone, and executable software packages that include everything needed to run an application: code, runtime, libraries, and system tools. Kubernetes helps in managing these containers efficiently across a cluster of machines.

In modern software development, there is a need for rapid deployment, scaling, and management of applications. This has led to the rise of microservices – a design pattern where an application is composed of small, independent modules that communicate with each other.

Containers are a natural fit for microservices, as they provide isolated environments to run different services. However, managing containers at scale is a complex task. This is where Kubernetes comes into play.

By providing a platform to easily manage thousands of containers across multiple servers, Kubernetes has become an essential tool for modern DevOps and has enabled businesses to be more agile, flexible, and efficient in delivering applications and services.

Features of Kubernetes

Kubernetes comes with a rich set of features that enable the management, orchestration, deployment, and scaling of containerized applications.

- Scalability: Kubernetes can scale applications on the fly. With the Horizontal Pod Autoscaler, you can automatically scale the number of Pods in a Deployment or ReplicaSet based on observed CPU utilization or other selected metrics.

- High Availability: Kubernetes helps keep your applications available by making sure that the desired number of instances of your application is always running. If a container goes down, Kubernetes automatically creates a new one in its place.

- Load Balancing: Kubernetes can distribute the traffic to your application across all of the containers that are running. This spreads the load and helps ensure that no single container becomes a bottleneck.

- Storage Orchestration: With Kubernetes, you can automatically mount the storage system of your choice, be it local storage, a public cloud provider, or a network storage system. This is essential for stateful applications that need to store data.

- Automated Rollouts and Rollbacks: Kubernetes lets you describe the desired state for your deployed containers, and it changes the actual state to the desired state at a controlled rate. For example, you can have Kubernetes create new containers for your deployment, remove existing containers, and adopt all their resources to the new containers.

- Secrets and Configuration Management: Managing sensitive information (like passwords, OAuth tokens, and SSH keys) is a delicate process. Kubernetes provides Secrets as a mechanism for storing and managing sensitive information. ConfigMaps let you decouple environment-specific configuration from your container images, which helps keep applications portable.

- Self-Healing: Kubernetes can detect when a container is not responding as expected and restart it, and it can replace and reschedule Pods onto other nodes when a node fails. It can also kill containers that don’t respond to user-defined health checks.

- Service Discovery: In a dynamic environment where containers are frequently created and destroyed, keeping track of different services becomes a challenge. Kubernetes has built-in service discovery features, which allow containers to find and communicate with each other.
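The scaling decision made by the Horizontal Pod Autoscaler can be illustrated with a short Python sketch. It follows the ratio rule described in the Kubernetes documentation (desired replicas = ceil(current replicas * current metric / target metric)). The function name, the parameter names, the 0.1 tolerance default, and the sample numbers are assumptions chosen for illustration, and the real controller handles further details (missing metrics, stabilization windows) that are left out here.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10, tolerance=0.1):
    """Approximate the HPA ratio rule; names and defaults are illustrative."""
    ratio = current_metric / target_metric
    # Leave the replica count alone when the metric is close to the target.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    wanted = math.ceil(current_replicas * ratio)
    # Clamp the result to the configured bounds.
    return max(min_replicas, min(max_replicas, wanted))


if __name__ == "__main__":
    # 4 Pods averaging 90% CPU against a 60% target.
    print(desired_replicas(4, current_metric=90, target_metric=60))
```

With these sample values the ratio is 1.5, so the sketch asks for 6 replicas.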

Comparison to Docker

At first glance, Kubernetes and Docker seem similar as both are related to deploying containers. However, they serve different purposes.

Docker focuses on the automation of operating-system-level virtualization, known as containerization. It’s primarily concerned with packaging an application and its dependencies into a single unit, called a container.

Kubernetes, on the other hand, is an orchestration platform for containers. It is used for automating the deployment, scaling, and management of containerized applications.

Basic Concepts of Kubernetes

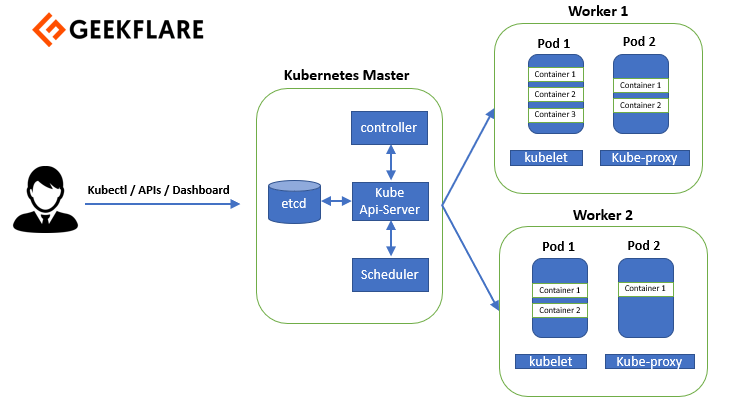

Kubernetes adopts a client-server architecture. Here, a Kubernetes environment comprises a control plane (or master), a distributed storage system to keep the cluster state consistent (etcd), and a set of nodes or worker machines.

Understanding Kubernetes Architecture

Control Plane

The Control Plane is responsible for managing the cluster. It maintains a record of all Kubernetes objects, runs continuous control loops to check the state of the cluster, and takes steps to ensure that the actual state matches the desired state defined by various objects. The Control Plane includes:

- kube-apiserver: This component serves the Kubernetes API using JSON over HTTP. It provides both the internal and external interfaces of Kubernetes.

- etcd: A consistent, highly available key-value store that Kubernetes uses as its backing store for all cluster data.

- kube-scheduler: It watches for newly created Pods with no assigned node and selects a node for them to run on, based on factors such as resource requirements and hardware constraints.

- kube-controller-manager: This component runs controllers, which are control loops that respond to changes in cluster state.

- cloud-controller-manager: This lets you link your cluster into your cloud provider’s API, and separates the components that interact with that cloud platform from components that only interact with your cluster.
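To make the kube-scheduler's role more concrete, here is a deliberately simplified Python sketch of the "filter, then score" idea: discard nodes that cannot fit the Pod, then pick one of the remaining nodes. This is not the real scheduler's algorithm. The dictionary fields, the node names, and the "most CPU left over" scoring rule are all assumptions made for illustration.

```python
def pick_node(pod_request, nodes):
    """Return the name of a node that fits the Pod, or None if none fits."""
    # Filtering: keep only nodes with enough free CPU and memory.
    feasible = [
        node for node in nodes
        if node["free_cpu_m"] >= pod_request["cpu_m"]
        and node["free_mem_mi"] >= pod_request["mem_mi"]
    ]
    if not feasible:
        return None  # in a real cluster the Pod would stay unscheduled
    # Scoring (illustrative rule): prefer the node with the most CPU left over.
    best = max(feasible, key=lambda node: node["free_cpu_m"] - pod_request["cpu_m"])
    return best["name"]


# Hypothetical cluster state: CPU in millicores, memory in MiB.
nodes = [
    {"name": "node-a", "free_cpu_m": 500, "free_mem_mi": 1024},
    {"name": "node-b", "free_cpu_m": 2000, "free_mem_mi": 4096},
    {"name": "node-c", "free_cpu_m": 4000, "free_mem_mi": 256},
]

if __name__ == "__main__":
    print(pick_node({"cpu_m": 1000, "mem_mi": 512}, nodes))
```

In this example only node-b has both enough CPU and enough memory, so it is the one selected.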

Nodes

Nodes are the worker machines where containers are deployed. Each node contains the services necessary to run Pods and is managed by the control plane. The services on a node include:

- kubelet: An agent that runs on each node in the cluster and makes sure that the containers described for each Pod are running.

- kube-proxy: This is a network proxy that runs on each node in your cluster, maintaining network rules and enabling communication to your Pods from network sessions inside or outside of your cluster.

- Container Runtime: This is the software used to run containers. Kubernetes supports runtimes such as containerd and CRI-O, along with any other implementation of the Kubernetes CRI (Container Runtime Interface). Docker Engine can still be used through the cri-dockerd adapter, since the built-in Docker integration (dockershim) was removed in Kubernetes 1.24.

Pods

In Kubernetes, the smallest deployable units are Pods. A Pod represents a single instance of a running process in your cluster. Each Pod is an isolated environment whose containers share network and storage resources.

Pods can contain single or multiple containers. Containers in the same Pod share an IP address, and can communicate with each other via localhost. They can also share volumes for data sharing and communication.
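A multi-container Pod with a shared volume might be described as follows. This is a minimal sketch that builds the manifest as a Python dictionary and prints it as JSON, which could be saved to a file and passed to kubectl apply -f. The Pod name, container names, image tags, and mount paths are placeholders chosen for illustration, not values from any particular deployment.

```python
import json

# Two containers in one Pod, both mounting the same emptyDir volume.
pod = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "web-with-sidecar", "labels": {"app": "web"}},
    "spec": {
        "volumes": [{"name": "shared-data", "emptyDir": {}}],
        "containers": [
            {
                "name": "web",
                "image": "nginx:1.25",  # placeholder image tag
                "ports": [{"containerPort": 80}],
                "volumeMounts": [
                    {"name": "shared-data", "mountPath": "/usr/share/nginx/html"}
                ],
            },
            {
                "name": "content-writer",
                "image": "busybox:1.36",  # placeholder image tag
                "command": [
                    "sh", "-c",
                    "while true; do date > /data/index.html; sleep 5; done",
                ],
                "volumeMounts": [{"name": "shared-data", "mountPath": "/data"}],
            },
        ],
    },
}

if __name__ == "__main__":
    print(json.dumps(pod, indent=2))
```

Here the second container writes a file into the shared volume and the first one serves it, which is one common reason to place two containers in the same Pod.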

Services

While Pods come and go, Services are a stable interface to connect with workloads. A Kubernetes Service is an abstraction for exposing applications running on a set of Pods as a network service. With Kubernetes, you don’t need to modify your application to use an unfamiliar service discovery mechanism. Kubernetes gives Pods their own IP addresses and a single DNS name for a set of Pods, and it can load-balance across them.

Types of Services include:

- ClusterIP: Exposes the Service on a cluster-internal IP, making the Service only reachable within the cluster.

- NodePort: Exposes the Service on each Node’s IP at a static port.

- LoadBalancer: Exposes the Service externally using a cloud provider’s load balancer.

- ExternalName: Maps the Service to the contents of the externalName field (e.g., foo.bar.example.com).
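As a rough illustration of how the selector-based Service types are declared, the following Python sketch builds a Service manifest and prints it as JSON. The helper name make_service, the Service name, and the port numbers are hypothetical. ExternalName Services are configured differently (they use an externalName field rather than a selector) and are not covered by this helper.

```python
import json


def make_service(name, selector, port, target_port, service_type="ClusterIP"):
    """Build a Service manifest for the selector-based Service types."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "type": service_type,
            # Traffic is sent to Pods whose labels match this selector.
            "selector": selector,
            "ports": [
                {"protocol": "TCP", "port": port, "targetPort": target_port}
            ],
        },
    }


# Hypothetical Service in front of Pods labelled app=database.
service = make_service("database", {"app": "database"}, port=5432, target_port=5432)

if __name__ == "__main__":
    print(json.dumps(service, indent=2))
```

Switching the service_type argument to "NodePort" or "LoadBalancer" changes how the same set of Pods is exposed, while the selector stays the same.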

Labels and Selectors

Labels are key/value pairs that can be attached to Kubernetes objects like Pods and Services to organize them based on user-defined attributes. Selectors are used in conjunction with labels to select a subset of objects based on their labels. For example, you might label Pods representing a database with app=database, and then use a selector with app=database to target these Pods for a Service.
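The matching rule for an equality-based selector is simple enough to sketch in a few lines of Python: an object is selected when every key/value pair in the selector also appears in its labels. The Pod names and the extra tier label below are made up for the example, and set-based selectors are not covered.

```python
def matches(selector, labels):
    """True when every key/value pair in the selector appears in the labels."""
    return all(labels.get(key) == value for key, value in selector.items())


# Hypothetical Pods with their labels.
pods = [
    {"name": "db-0", "labels": {"app": "database", "tier": "backend"}},
    {"name": "db-1", "labels": {"app": "database", "tier": "backend"}},
    {"name": "web-0", "labels": {"app": "web", "tier": "frontend"}},
]

selected = [p["name"] for p in pods if matches({"app": "database"}, p["labels"])]

if __name__ == "__main__":
    print(selected)  # ['db-0', 'db-1']
```

Extra labels on a Pod do not prevent a match. Only the keys named in the selector are compared.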

Replication Controllers and ReplicaSets

Replication Controllers and ReplicaSets ensure that a specified number of replicas of a Pod are running at all times. This is essential both for high availability and for scaling applications by adjusting the number of running Pods.
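The idea of keeping a fixed number of replicas running can be sketched as a small reconciliation function: compare the desired count with what is actually running, then create or remove Pods to close the gap. This is a toy model and not the controller's actual code. The Pod naming scheme and the choice of which Pod to remove are assumptions.

```python
import itertools


def reconcile(desired_replicas, running_pods, name_counter):
    """Return a new Pod list that matches the desired replica count."""
    pods = list(running_pods)
    # Too few Pods: create replacements.
    while len(pods) < desired_replicas:
        pods.append(f"pod-{next(name_counter)}")
    # Too many Pods: remove the surplus (here simply the most recent ones).
    while len(pods) > desired_replicas:
        pods.pop()
    return pods


counter = itertools.count(1)
pods = reconcile(3, [], counter)        # initial creation
after_create = list(pods)
pods.remove("pod-2")                    # simulate a Pod failure
pods = reconcile(3, pods, counter)      # a replacement is created
after_heal = list(pods)
pods = reconcile(2, pods, counter)      # scale down to two replicas
after_scale_down = list(pods)

if __name__ == "__main__":
    print(after_create, after_heal, after_scale_down)
```

The same loop covers both cases named above: replacing a failed Pod restores availability, and changing the desired count scales the application.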

Deployments

Deployments are higher-level objects that manage ReplicaSets and provide declarative updates to Pods, along with other useful features. By default, a Deployment performs a rolling update that replaces Pods progressively, so the application can stay available during the update process.
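A Deployment manifest might look like the following sketch, again built as a Python dictionary and printed as JSON. The name, label, image tag, replica count, and the maxSurge and maxUnavailable values are illustrative assumptions. The one structural requirement shown is that the selector must match the labels in the Pod template.

```python
import json

labels = {"app": "web"}

deployment = {
    "apiVersion": "apps/v1",
    "kind": "Deployment",
    "metadata": {"name": "web", "labels": labels},
    "spec": {
        "replicas": 3,
        # The selector must match the labels set in the Pod template below.
        "selector": {"matchLabels": labels},
        "strategy": {
            "type": "RollingUpdate",
            # Illustrative values: add one new Pod at a time, remove none early.
            "rollingUpdate": {"maxSurge": 1, "maxUnavailable": 0},
        },
        "template": {
            "metadata": {"labels": labels},
            "spec": {
                "containers": [
                    {"name": "web", "image": "nginx:1.25"}  # placeholder image
                ]
            },
        },
    },
}

if __name__ == "__main__":
    print(json.dumps(deployment, indent=2))
```

Changing the image field and re-applying the manifest is what would trigger a new rollout under this strategy.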

Namespaces

Namespaces are virtual clusters inside a physical Kubernetes cluster. They allow for the isolation of resources among different projects or user groups. For instance, you can have multiple teams using the same physical cluster but working inside different Namespaces, which helps ensure that they don’t inadvertently interfere with each other.

ConfigMaps and Secrets

ConfigMaps allow you to decouple configuration artifacts from image content to keep containerized applications portable. ConfigMaps can be used to store configuration data in the form of key-value pairs that can be consumed by Pods.

Secrets are similar to ConfigMaps, but are used to store sensitive information like passwords, OAuth tokens, and SSH keys. Storing sensitive information in Secrets is safer and more flexible than putting it directly in the Pod definition or in the container image.
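The difference in how the two objects carry their data can be shown with a short Python sketch. ConfigMap values are stored as plain strings, while values under a Secret's data field are base64-encoded. The helper name make_secret and all names and values below are placeholders. Note that base64 is an encoding rather than encryption, so access to Secrets still needs to be restricted by other means.

```python
import base64
import json

# Plain key-value configuration (placeholder values).
config_map = {
    "apiVersion": "v1",
    "kind": "ConfigMap",
    "metadata": {"name": "app-config"},
    "data": {"LOG_LEVEL": "info", "DB_HOST": "database"},
}


def make_secret(name, values):
    """Build a Secret manifest, base64-encoding each value for the data field."""
    encoded = {
        key: base64.b64encode(value.encode("utf-8")).decode("ascii")
        for key, value in values.items()
    }
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name},
        "type": "Opaque",
        "data": encoded,
    }


# Placeholder credentials for illustration only.
secret = make_secret("db-credentials", {"username": "admin", "password": "s3cr3t"})

if __name__ == "__main__":
    print(json.dumps(config_map, indent=2))
    print(json.dumps(secret, indent=2))
```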

Volumes

In Kubernetes, a Volume represents a directory that can be accessed by containers in a Pod. Unlike files written to a container’s own file system, data in a Volume survives container restarts. Kubernetes supports a variety of Volume types, such as:

- emptyDir: A simple empty directory used for storing non-persistent data.

- hostPath: Used for mounting directories from the host node’s filesystem into the Pod.

- nfs: Allows an existing NFS (Network File System) share to be mounted into the Pod.

- persistentVolumeClaim: Used to mount a PersistentVolume into a Pod.

Persistent Volumes and Persistent Volume Claims

Persistent Volumes (PVs) provide a way to use storage resources independent of the Pod lifecycle. This ensures that data persists beyond the life of a Pod. Persistent Volume Claims (PVCs) are user requests for storage resources. They allow a user to request specific sizes, access modes, and other attributes of the storage resource.
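A claim for storage, and the way a Pod refers to it, might be sketched as follows. The claim name, the requested size, and the access mode are illustrative assumptions. Which sizes and modes can actually be satisfied depends on the storage available in the cluster.

```python
import json

# A request for 1 GiB of storage, mountable read-write by a single node.
pvc = {
    "apiVersion": "v1",
    "kind": "PersistentVolumeClaim",
    "metadata": {"name": "data-claim"},
    "spec": {
        "accessModes": ["ReadWriteOnce"],
        "resources": {"requests": {"storage": "1Gi"}},
    },
}

# Entry for a Pod's spec.volumes list that mounts the claim by name.
pod_volume = {
    "name": "data",
    "persistentVolumeClaim": {"claimName": pvc["metadata"]["name"]},
}

if __name__ == "__main__":
    print(json.dumps(pvc, indent=2))
    print(json.dumps(pod_volume, indent=2))
```

The Pod only names the claim. It does not need to know which PersistentVolume ends up backing it.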

Ingress

Ingress exposes HTTP and HTTPS routes from outside the cluster to services within the cluster. It can provide load balancing, SSL/TLS termination, and host-based or path-based routing. Ingress objects require an Ingress controller, such as the NGINX Ingress Controller or Traefik, to function.
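Host-based and path-based routing can be illustrated with an Ingress manifest plus a small function that mimics how a request might be matched against it. The host name, Service names, and ports are hypothetical, and the route function is a simplified stand-in for what an Ingress controller does (it handles only Prefix paths and picks the longest matching one).

```python
import json

ingress = {
    "apiVersion": "networking.k8s.io/v1",
    "kind": "Ingress",
    "metadata": {"name": "web-ingress"},
    "spec": {
        "rules": [
            {
                "host": "shop.example.com",  # hypothetical host
                "http": {
                    "paths": [
                        {
                            "path": "/api",
                            "pathType": "Prefix",
                            "backend": {"service": {"name": "api", "port": {"number": 8080}}},
                        },
                        {
                            "path": "/",
                            "pathType": "Prefix",
                            "backend": {"service": {"name": "web", "port": {"number": 80}}},
                        },
                    ]
                },
            }
        ]
    },
}


def route(ingress, host, path):
    """Return the backend Service name for a request, or None if nothing matches."""
    best = None
    for rule in ingress["spec"]["rules"]:
        if rule["host"] != host:
            continue
        for entry in rule["http"]["paths"]:
            prefix = entry["path"].rstrip("/")
            # Match whole path elements, so "/api" does not match "/apiary".
            if path == prefix or path.startswith(prefix + "/"):
                if best is None or len(prefix) > len(best[0]):
                    best = (prefix, entry["backend"]["service"]["name"])
    return best[1] if best else None


if __name__ == "__main__":
    print(json.dumps(ingress, indent=2))
    print(route(ingress, "shop.example.com", "/api/orders"))
```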

Resource Quotas and Limit Ranges

Resource Quotas provide constraints that limit resource consumption per Namespace. They can be used to limit the quantity of objects created in a Namespace, or to limit the total amount of compute resources that can be consumed by resources in that Namespace.

Limit Ranges are used to set minimum and maximum constraints on the amount of resources a single entity (e.g., Pod, Container, PVC) can consume.
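A per-Namespace quota might be declared as in the sketch below, with a small check that mimics the kind of decision made when a new Pod is requested. The Namespace name and the limits are made-up values, and pod_count_allowed is a hypothetical helper that only looks at the Pod count. Real quota enforcement also covers the compute resources listed under hard.

```python
import json

quota = {
    "apiVersion": "v1",
    "kind": "ResourceQuota",
    "metadata": {"name": "team-a-quota", "namespace": "team-a"},
    "spec": {
        "hard": {
            "pods": "10",              # object-count limit
            "requests.cpu": "4",       # total CPU requested in the Namespace
            "requests.memory": "8Gi",  # total memory requested in the Namespace
        }
    },
}


def pod_count_allowed(quota, current_pods):
    """True if one more Pod would still fit under the quota's Pod limit."""
    return current_pods + 1 <= int(quota["spec"]["hard"]["pods"])


if __name__ == "__main__":
    print(json.dumps(quota, indent=2))
    print(pod_count_allowed(quota, current_pods=9))
    print(pod_count_allowed(quota, current_pods=10))
```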
