What is the Indexing API

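The Indexing API is a Google API that lets site owners notify Google directly when pages are added, updated, or removed, so that Google's crawler visits them promptly. It is intended for short-lived pages that need to be indexed quickly. Google currently supports it only for pages with job posting (JobPosting) or livestream (BroadcastEvent embedded in a VideoObject) structured data.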

The Indexing API offers the following functionalities:

- Update URLs: Notifies Google of a new URL to crawl, or tells Google that the content at a previously submitted URL has been updated.
- Remove URLs: After a page has been removed from the server, notifies Google so that it drops the page from its index and stops recrawling the URL.
- Get Request Status: Returns the most recent notifications Google has received for a given URL.
- Send Batch Indexing Requests: Combines up to 100 calls into a single HTTP request, reducing the number of HTTP connections the client has to open.
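The four operations map onto a small set of endpoints. The helpers below are a minimal sketch, assuming the v3 REST endpoints from Google's Indexing API reference and the standard Google multipart/mixed batch format. The function names (publish_body, metadata_request_url, batch_body) and the boundary string are hypothetical, and the code only builds request bodies and URLs without sending anything.

```python
import json
from urllib.parse import quote

# Indexing API v3 endpoints (assumed from Google's REST reference).
PUBLISH_ENDPOINT = "https://indexing.googleapis.com/v3/urlNotifications:publish"
METADATA_ENDPOINT = "https://indexing.googleapis.com/v3/urlNotifications/metadata"
BATCH_ENDPOINT = "https://indexing.googleapis.com/batch"
MAX_BATCH_SIZE = 100  # Up to 100 calls per batch request.


def publish_body(url: str, removed: bool = False) -> str:
    """JSON body for Update URLs (URL_UPDATED) or Remove URLs (URL_DELETED)."""
    notification_type = "URL_DELETED" if removed else "URL_UPDATED"
    return json.dumps({"url": url, "type": notification_type})


def metadata_request_url(url: str) -> str:
    """GET URL for Get Request Status; the page URL is percent-encoded."""
    return f"{METADATA_ENDPOINT}?url={quote(url, safe='')}"


def batch_body(urls: list[str], boundary: str = "batch_boundary") -> str:
    """Hypothetical helper: multipart/mixed body with one URL_UPDATED call per URL."""
    if len(urls) > MAX_BATCH_SIZE:
        raise ValueError(f"a batch can hold at most {MAX_BATCH_SIZE} calls")
    parts = []
    for i, url in enumerate(urls, start=1):
        parts.append(
            f"--{boundary}\r\n"
            "Content-Type: application/http\r\n"
            "Content-Transfer-Encoding: binary\r\n"
            f"Content-ID: <item{i}>\r\n"
            "\r\n"
            "POST /v3/urlNotifications:publish\r\n"
            "Content-Type: application/json\r\n"
            "\r\n"
            f"{publish_body(url)}\r\n"
        )
    parts.append(f"--{boundary}--\r\n")  # Closing boundary ends the batch.
    return "".join(parts)
```

To send a batch with an authorized httplib2 object, you would POST batch_body(urls) to BATCH_ENDPOINT with the header `Content-Type: multipart/mixed; boundary=batch_boundary`. The google-api-python-client library's new_batch_http_request() can build this format for you instead.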

How to Use the Indexing API

This article shows how to use the Indexing API from Google Colab.

Create a Google Cloud Project

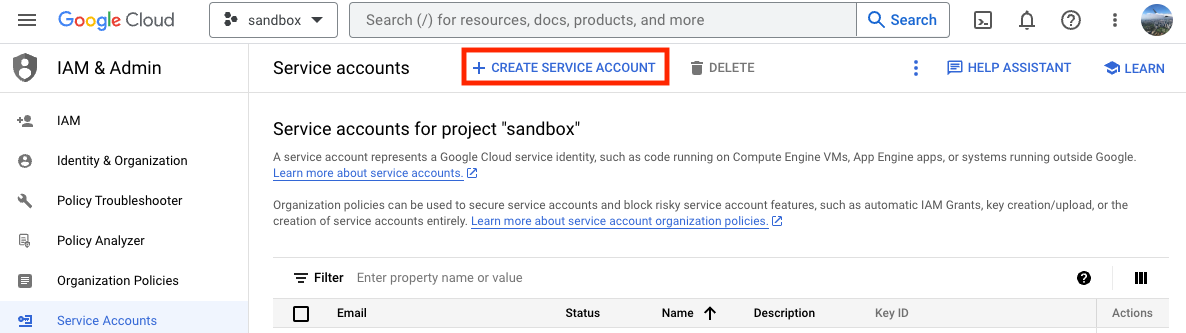

First, open the Service Accounts page in the Google Cloud console and create or select a project. For this article, I created and selected a project named sandbox.

Create a Service Account

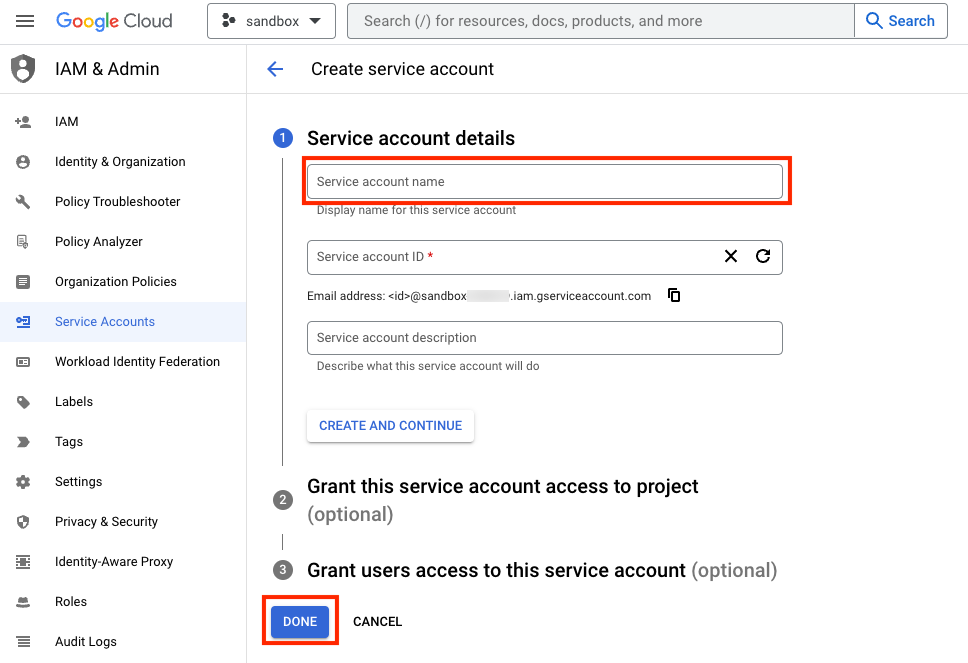

Click "CREATE SERVICE ACCOUNT", enter a service account name, and click "DONE". In this article, the service account is named indexing-api.

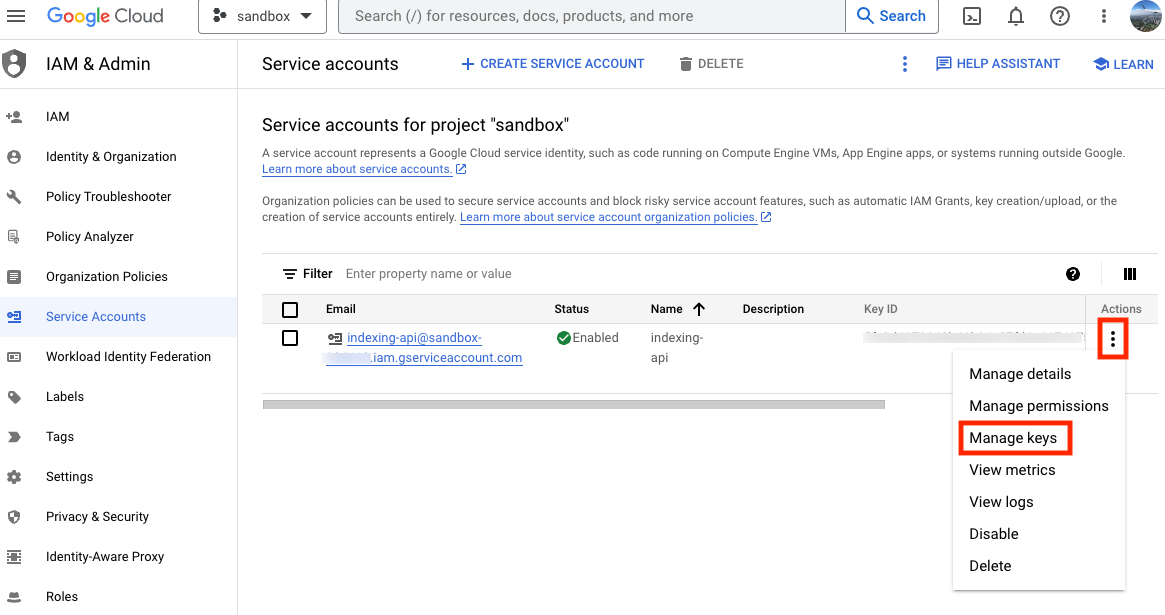

Select "Manage keys" for the added service account.

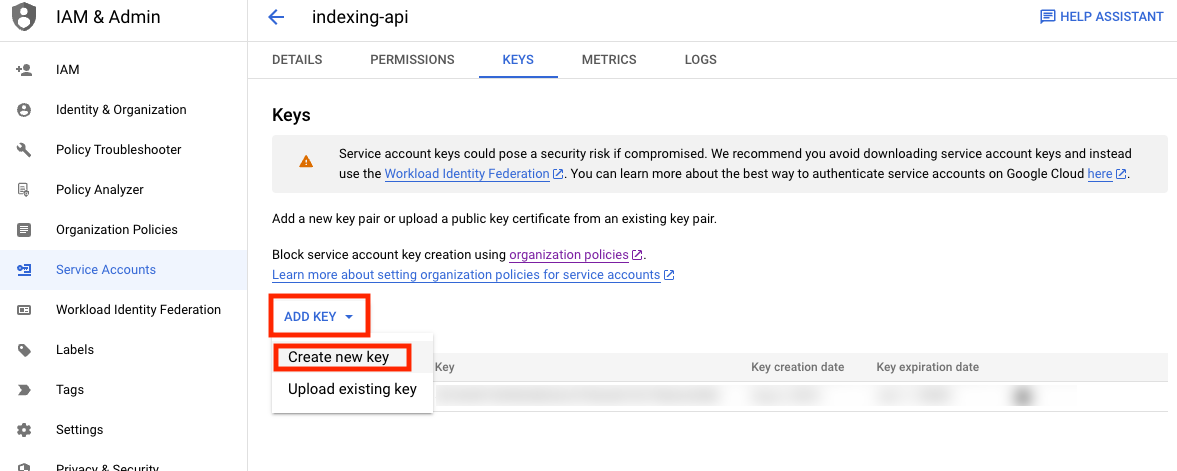

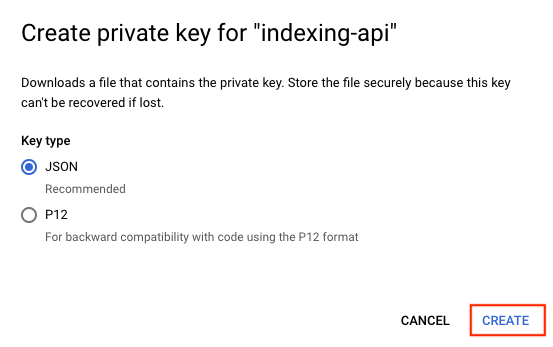

Click "ADD KEY" > "Create new key", select JSON as the key type, and click "CREATE". The key file is downloaded automatically. Store it securely, since anyone who has it can call the API as this service account.

Enable the Indexing API

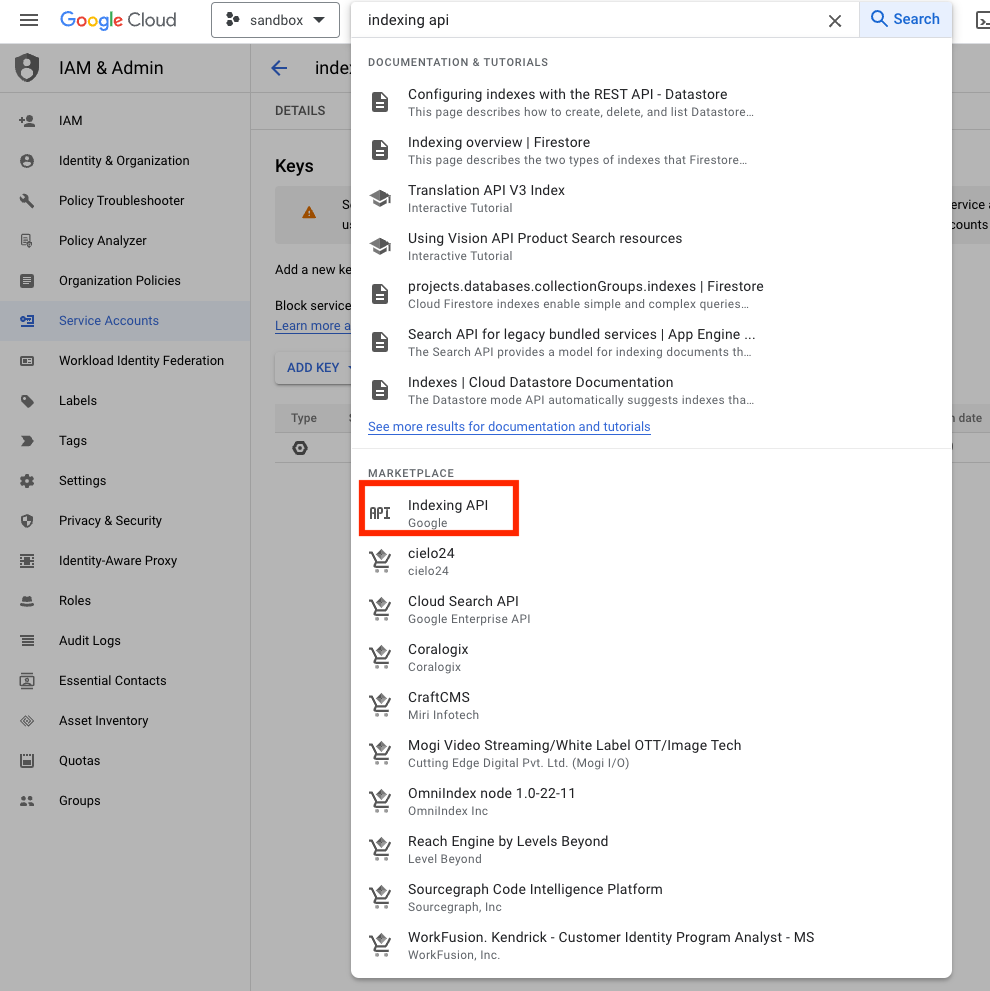

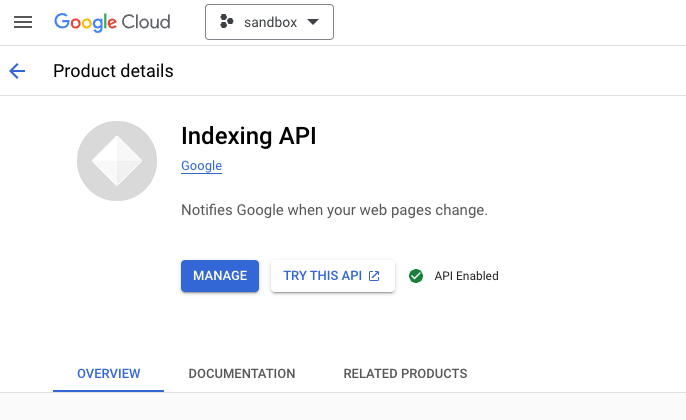

Next, enable the Indexing API: search for "indexing api" in the console's search bar, select Indexing API, and click "Enable". If the page then shows "API Enabled", the API has been enabled successfully.

Verify Ownership in Google Search Console

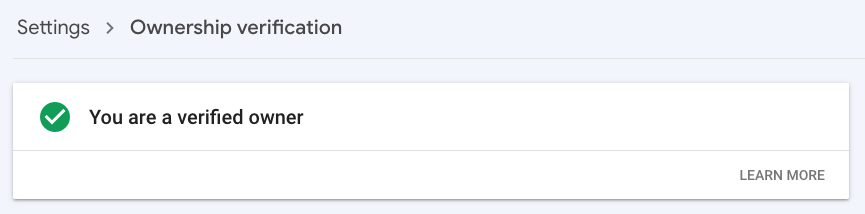

Open the Ownership verification page in Google Search Console and make sure "You are a verified owner" is displayed for your site.

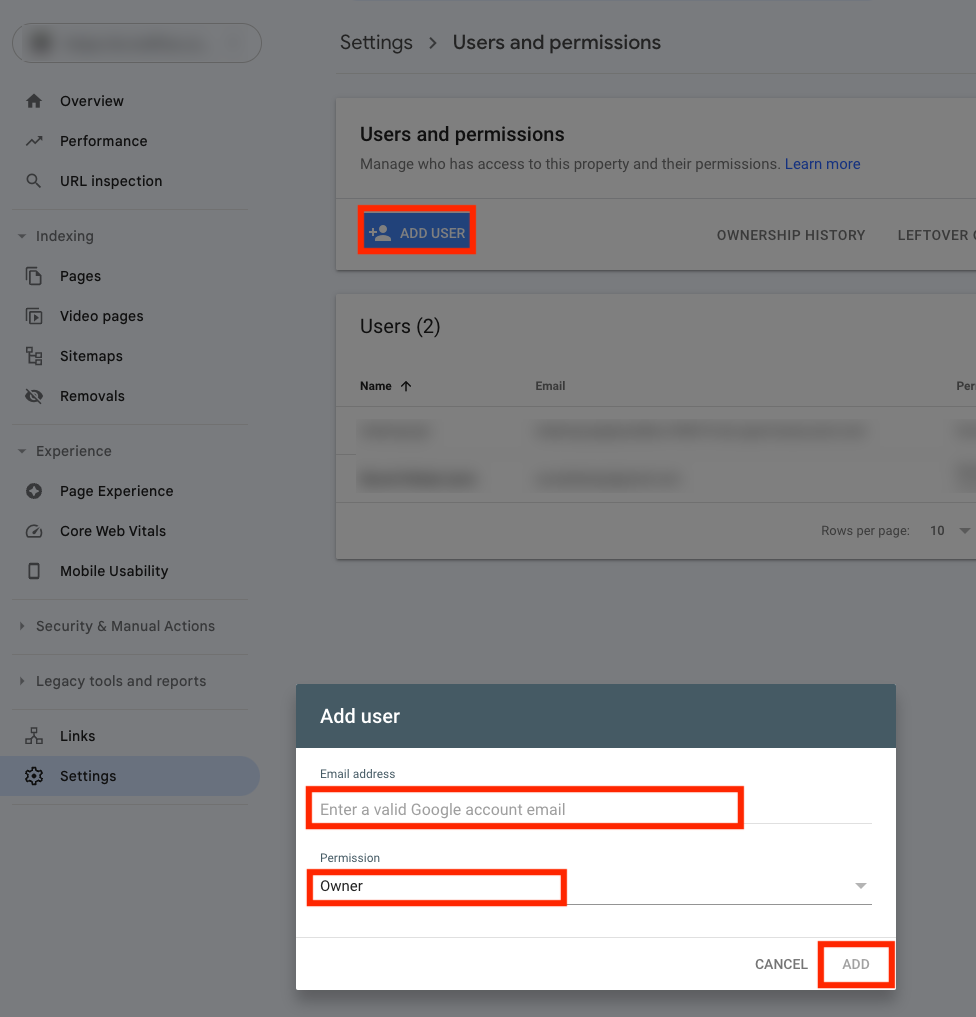

Add Owner in Google Search Console

Open the Users and permissions page in Google Search Console, select the verified property, and add the email address from the client_email field of the service account's JSON key as an Owner.
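If you are unsure which address to add, you can read client_email straight from the downloaded key file. This is a minimal sketch: service_account_email is a hypothetical helper, and the file name in the usage line is the example key name used in this article.

```python
import json


def service_account_email(key_path: str) -> str:
    """Hypothetical helper: return the client_email of a service account JSON key."""
    with open(key_path, encoding="utf-8") as f:
        key = json.load(f)
    # Service account keys downloaded from the Cloud console have "type": "service_account".
    if key.get("type") != "service_account":
        raise ValueError(f"{key_path} does not look like a service account key")
    return key["client_email"]


if __name__ == "__main__":
    # Example key file name from this article; replace it with your own.
    print(service_account_email("sandbox-aaaaaaaaaaaa.json"))
```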

Call the Indexing API from Google Colab

Open a Google Colab notebook and upload the service account's JSON key file.
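Instead of typing the key file name by hand, a small helper can look for uploaded service account keys. This sketch assumes uploaded files land in the notebook's working directory (/content by default in Colab); find_service_account_keys is a hypothetical name.

```python
import glob
import json
import os


def find_service_account_keys(directory: str = ".") -> list[str]:
    """Hypothetical helper: paths of JSON files in `directory` that are service account keys."""
    matches = []
    for path in sorted(glob.glob(os.path.join(directory, "*.json"))):
        try:
            with open(path, encoding="utf-8") as f:
                data = json.load(f)
        except (OSError, ValueError):
            continue  # Skip unreadable files and files that are not valid JSON.
        if isinstance(data, dict) and data.get("type") == "service_account":
            matches.append(path)
    return matches
```

In a notebook you might then set JSON_KEY_FILE = find_service_account_keys("/content")[0], after checking that exactly one key was found.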

Then paste the following code into a cell, set JSON_KEY_FILE to the name of your key file, and run it:

```python
# If oauth2client is missing from the runtime, install it with: !pip install oauth2client
import json
import time

import httplib2
from oauth2client.service_account import ServiceAccountCredentials

SCOPES = ["https://www.googleapis.com/auth/indexing"]
ENDPOINT = "https://indexing.googleapis.com/v3/urlNotifications:publish"

# Path of the JSON key file downloaded from the Google Cloud console.
JSON_KEY_FILE = "sandbox-aaaaaaaaaaaa.json"

credentials = ServiceAccountCredentials.from_json_keyfile_name(JSON_KEY_FILE, scopes=SCOPES)
http = credentials.authorize(httplib2.Http())

# The website URLs you want Google to crawl.
urls = [
    "https://io.traffine.com/id/articles/minikube",
    "https://io.traffine.com/id/articles/kubectl",
]

for url in urls:
    # URL_UPDATED announces a new or updated page; use URL_DELETED for removed pages.
    body = json.dumps({"url": url, "type": "URL_UPDATED"})
    response, content = http.request(
        ENDPOINT,
        method="POST",
        body=body,
        headers={"Content-Type": "application/json"},
    )
    if response.status == 200:
        print(f"Succeeded. Status: {response.status} {url}")
    else:
        print(f"Failed. Status: {response.status} {url}")
        print(content)  # Error details returned by the API.
    time.sleep(5)  # Pause between requests.
```

Running the code prints output like the following:

```
Succeeded. Status: 200 https://io.traffine.com/id/articles/minikube
Succeeded. Status: 200 https://io.traffine.com/id/articles/kubectl
```
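When many URLs are sent, some requests may fail with HTTP 429 (quota exceeded) or a transient 5xx error. The sketch below retries those responses with exponential backoff. It is an illustration under stated assumptions: publish_with_retry is a hypothetical helper, and it works with any httplib2-style object whose request() returns (response, content), such as the authorized http object above. If the project's daily quota is used up, retrying will not help until the quota resets.

```python
import json
import time


def publish_with_retry(http, endpoint, url, max_attempts=5, base_delay=1.0, sleep=time.sleep):
    """Hypothetical helper: send URL_UPDATED for `url`, retrying 429 and 5xx responses.

    Returns (status, content) from the last attempt.
    """
    body = json.dumps({"url": url, "type": "URL_UPDATED"})
    for attempt in range(max_attempts):
        response, content = http.request(
            endpoint,
            method="POST",
            body=body,
            headers={"Content-Type": "application/json"},
        )
        status = int(response.status)
        retryable = status == 429 or status >= 500
        if not retryable or attempt == max_attempts - 1:
            return status, content
        sleep(base_delay * 2 ** attempt)  # Waits 1 s, 2 s, 4 s, ... with the defaults.
    raise ValueError("max_attempts must be at least 1")
```

Inside the loop above, status, content = publish_with_retry(http, ENDPOINT, url) could replace the direct http.request call.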

After the requests are sent, the URLs are typically crawled within about two minutes, and sometimes within a few seconds. The timing is not guaranteed, so actual delays may vary.
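To confirm that Google received a notification, the Get Request Status operation returns metadata for a URL. A minimal sketch, assuming the v3 urlNotifications/metadata endpoint and its latestUpdate.notifyTime field; latest_update_time is a hypothetical helper that works with the authorized http object above. Note that notifyTime records when the notification was created, not when Googlebot actually fetched the page.

```python
import json
from urllib.parse import quote

METADATA_ENDPOINT = "https://indexing.googleapis.com/v3/urlNotifications/metadata"


def latest_update_time(http, url):
    """Hypothetical helper: notifyTime of the latest URL_UPDATED notice for `url`, or None."""
    request_url = f"{METADATA_ENDPOINT}?url={quote(url, safe='')}"
    response, content = http.request(request_url, method="GET")
    if int(response.status) != 200:
        return None  # e.g. Google has no notification on record for this URL.
    metadata = json.loads(content)
    return metadata.get("latestUpdate", {}).get("notifyTime")
```

For example, print(latest_update_time(http, "https://io.traffine.com/id/articles/minikube")) after running the loop above.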
