Building an AWS Environment for Hosting dbt Docs using Terraform

This article shows how to build an AWS environment for hosting dbt docs with Terraform. The dbt documentation files are uploaded to S3 and served through CloudFront, and a Lambda@Edge function adds Basic authentication in front of the CloudFront distribution.

Terraform Code

You can find the Terraform code in the following GitHub repository:

Directory Structure

The directory structure is as follows:

    .
    ├── backend.tf
    ├── cloudfront.tf
    ├── iam.tf
    ├── lambda.tf
    ├── lambda_edge
    │   └── index.py
    ├── lambda_edge_archive
    ├── provider.tf
    ├── s3.tf
    └── variables.tf

Construction Steps

We will proceed with building an environment for hosting dbt Docs on AWS in the following order:

- Write the Lambda@Edge function for Basic authentication.

- Build the infrastructure with Terraform.

Writing the Lambda@Edge Function

The Lambda@Edge function that performs Basic authentication is written in `index.py`, as shown below:

    """
    Lambda@Edge for authentication
    """

    import base64


    def authenticate(user, password):
        # Compare against fixed credentials (hardcoded here).
        return user == "admin" and password == "passW0rd"


    def handler(event, context):
        request = event["Records"][0]["cf"]["request"]
        headers = request["headers"]

        # Returned whenever authentication fails. The WWW-Authenticate
        # header makes the browser prompt for credentials.
        error_response = {
            "status": "401",
            "statusDescription": "Unauthorized",
            "body": "Authentication Failed",
            "headers": {
                "www-authenticate": [
                    {
                        "key": "WWW-Authenticate",
                        "value": 'Basic realm="Basic Authentication"',
                    }
                ]
            },
        }

        if "authorization" not in headers:
            return error_response

        try:
            # The header value has the form "Basic <base64(user:password)>".
            auth_values = headers["authorization"][0]["value"].split(" ")
            # Split on the first colon only, so the password may contain ":".
            auth = base64.b64decode(auth_values[1]).decode().split(":", 1)
            (user, password) = (auth[0], auth[1])
            # Returning the request lets CloudFront continue processing it.
            return request if authenticate(user, password) else error_response
        except Exception:
            # Malformed or undecodable headers are treated as failed logins.
            return error_response
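To make the header handling easier to follow, here is a minimal sketch of how a Basic authentication header is built on the client side and decoded again from a CloudFront viewer-request event. The helper names (`build_basic_auth_value`, `make_viewer_request_event`, `parse_credentials`) are hypothetical and not part of the repository, and the event contains only the fields the handler reads.

```python
import base64


def build_basic_auth_value(user: str, password: str) -> str:
    """Return the Authorization header value a browser sends for Basic auth."""
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    return f"Basic {token}"


def make_viewer_request_event(auth_value=None) -> dict:
    """Build a minimal CloudFront viewer-request event (hypothetical helper)."""
    headers = {}
    if auth_value is not None:
        # CloudFront uses lowercase header names as dictionary keys.
        headers["authorization"] = [{"key": "Authorization", "value": auth_value}]
    return {"Records": [{"cf": {"request": {"uri": "/index.html", "headers": headers}}}]}


def parse_credentials(event: dict):
    """Mirror the handler's decoding step; return (user, password) or None."""
    headers = event["Records"][0]["cf"]["request"]["headers"]
    if "authorization" not in headers:
        return None
    scheme, _, token = headers["authorization"][0]["value"].partition(" ")
    if scheme != "Basic":
        return None
    # Split on the first colon only, so the password may contain ":".
    user, separator, password = base64.b64decode(token).decode().partition(":")
    return (user, password) if separator else None


event = make_viewer_request_event(build_basic_auth_value("admin", "passW0rd"))
print(parse_credentials(event))  # ('admin', 'passW0rd')
```

An event built this way can also be passed to `handler` locally to check both the success and the 401 paths before deploying.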

Building the Infrastructure with Terraform

We will use Terraform to build the necessary infrastructure.

Writing the Source Code

Write the Terraform code to create the infrastructure. You can refer to the source code in the following repository:

Here, I will explain only the key points.

Multi-Region Setup

The provider.tf file contains the AWS provider configuration. Lambda@Edge functions must be created in the US East (N. Virginia) region (us-east-1), so we define an additional aliased provider for us-east-1 and reference it in the Lambda function definition.

    provider "aws" {
      region = "ap-northeast-1"

      default_tags {
        tags = {
          Env       = "dev"
          App       = "my-app"
          ManagedBy = "Terraform"
        }
      }
    }

    provider "aws" {
      alias  = "virginia"
      region = "us-east-1"

      default_tags {
        tags = {
          Env       = "dev"
          App       = "my-app"
          ManagedBy = "Terraform"
        }
      }
    }

    resource "aws_lambda_function" "cloudfront_auth" {
      provider = aws.virginia
      .
      .
      .
    }

Lambda Zipping

The archive_file data source in lambda.tf creates a zip archive of the specified directory.

    data "archive_file" "function_source" {
      type        = "zip"
      source_dir  = "lambda_edge"
      output_path = "lambda_edge_archive/function.zip"
    }

The Lambda function definition then references the generated zip file.

    resource "aws_lambda_function" "cloudfront_auth" {
      .
      .
      .
      filename         = data.archive_file.function_source.output_path
      source_code_hash = data.archive_file.function_source.output_base64sha256
    }
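The source_code_hash argument lets Terraform notice when the archive contents change. As a rough illustration of what output_base64sha256 holds, the sketch below assumes it is the Base64 encoding of the archive's raw SHA-256 digest (not of the hex string). The function names are hypothetical, and the in-memory bytes stand in for the real function.zip.

```python
import base64
import hashlib
from pathlib import Path


def base64_sha256(data: bytes) -> str:
    """Base64-encode the raw (binary) SHA-256 digest of the given bytes."""
    return base64.b64encode(hashlib.sha256(data).digest()).decode()


def file_base64_sha256(path: str) -> str:
    """Same computation for a file on disk, e.g. the generated zip archive."""
    return base64_sha256(Path(path).read_bytes())


# Any change to the input bytes produces a different hash value.
print(base64_sha256(b"example archive bytes"))
print(base64_sha256(b"example archive bytes, modified"))
```

Calling `file_base64_sha256("lambda_edge_archive/function.zip")` after the archive has been generated should give a value comparable with the hash Terraform records, under the assumption above.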

Lambda Versioning

A CloudFront Lambda function association must reference a specific published version of the Lambda function. For information on Lambda versioning, please refer to the following article:

Setting publish = true in the Lambda function definition publishes a new version whenever the function is created or changed.

    resource "aws_lambda_function" "cloudfront_auth" {
      .
      .
      .
      publish = true
    }

The CloudFront distribution references that version through the function's qualified_arn, which includes the version number.

    resource "aws_cloudfront_distribution" "main" {
      .
      .
      .
      lambda_function_association {
        .
        .
        lambda_arn = aws_lambda_function.cloudfront_auth.qualified_arn
      }
    }
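The two requirements discussed so far (the function lives in us-east-1, and CloudFront is given an ARN that ends in a numbered version) can be summarized in a small check. This is only a sketch: `lambda_edge_arn_problems` is a hypothetical helper, and the account ID and function name in the examples are placeholders.

```python
def lambda_edge_arn_problems(arn: str) -> list:
    """Return reasons why an ARN would not suit Lambda@Edge (empty list if none)."""
    # Unqualified: arn:aws:lambda:<region>:<account>:function:<name>             (7 parts)
    # Qualified:   arn:aws:lambda:<region>:<account>:function:<name>:<qualifier> (8 parts)
    parts = arn.split(":")
    if len(parts) not in (7, 8) or parts[:3] != ["arn", "aws", "lambda"] or parts[5] != "function":
        return ["not a Lambda function ARN"]

    problems = []
    if parts[3] != "us-east-1":
        problems.append("function is not in us-east-1")
    if len(parts) == 7:
        problems.append("no version qualifier")
    elif not parts[7].isdigit():
        # "$LATEST" and alias names are qualifiers, but not numbered versions.
        problems.append("qualifier is not a numbered version")
    return problems


# Placeholder account ID and function name, for illustration only.
base = "arn:aws:lambda:us-east-1:123456789012:function:cloudfront_auth"
print(lambda_edge_arn_problems(base + ":3"))        # []
print(lambda_edge_arn_problems(base))               # ['no version qualifier']
print(lambda_edge_arn_problems(base + ":$LATEST"))  # ['qualifier is not a numbered version']
```

With publish = true, qualified_arn has the first form, while the plain arn attribute has the second, which is why the distribution uses qualified_arn.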

Terraform Apply

Run terraform apply to build the environment.

Storing dbt Docs in S3

In the dbt project directory, generate the dbt documents and upload them to S3 with the following commands:

    $ dbt docs generate
    $ aws s3 cp target/manifest.json s3://<s3 bucket name created by terraform>/
    $ aws s3 cp target/run_results.json s3://<s3 bucket name created by terraform>/
    $ aws s3 cp target/index.html s3://<s3 bucket name created by terraform>/
    $ aws s3 cp target/catalog.json s3://<s3 bucket name created by terraform>/
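If you want to script this step, the sketch below first checks that all four files exist in the target directory and then builds the same aws s3 cp commands. The function names are hypothetical, the bucket name must be supplied by you, and `upload_docs` assumes the AWS CLI is installed and configured.

```python
import subprocess
from pathlib import Path

# The four files copied by the commands above.
DOC_ARTIFACTS = ["manifest.json", "run_results.json", "index.html", "catalog.json"]


def build_upload_commands(target_dir: str, bucket: str) -> list:
    """Return one `aws s3 cp` argument list per artifact, failing early if any is missing."""
    target = Path(target_dir)
    missing = [name for name in DOC_ARTIFACTS if not (target / name).is_file()]
    if missing:
        raise FileNotFoundError(f"missing in {target}: {', '.join(missing)}")
    return [["aws", "s3", "cp", str(target / name), f"s3://{bucket}/"] for name in DOC_ARTIFACTS]


def upload_docs(target_dir: str, bucket: str) -> None:
    """Run the copy commands one by one; stops at the first failure."""
    for command in build_upload_commands(target_dir, bucket):
        subprocess.run(command, check=True)
```

Running `upload_docs("target", "<bucket name>")` from the dbt project directory after `dbt docs generate` would perform the same uploads as the commands above.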

Document Verification

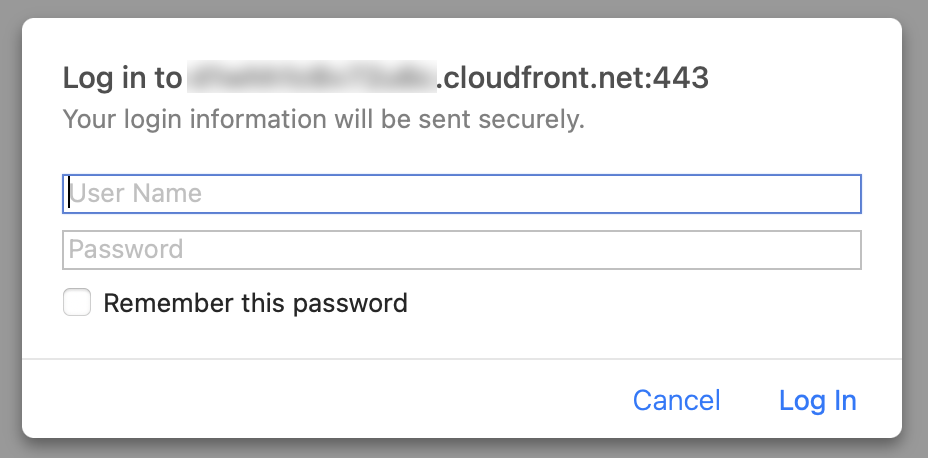

When you access the CloudFront distribution's domain name, the browser prompts for Basic authentication credentials.

After authentication, you will be able to view the documents.

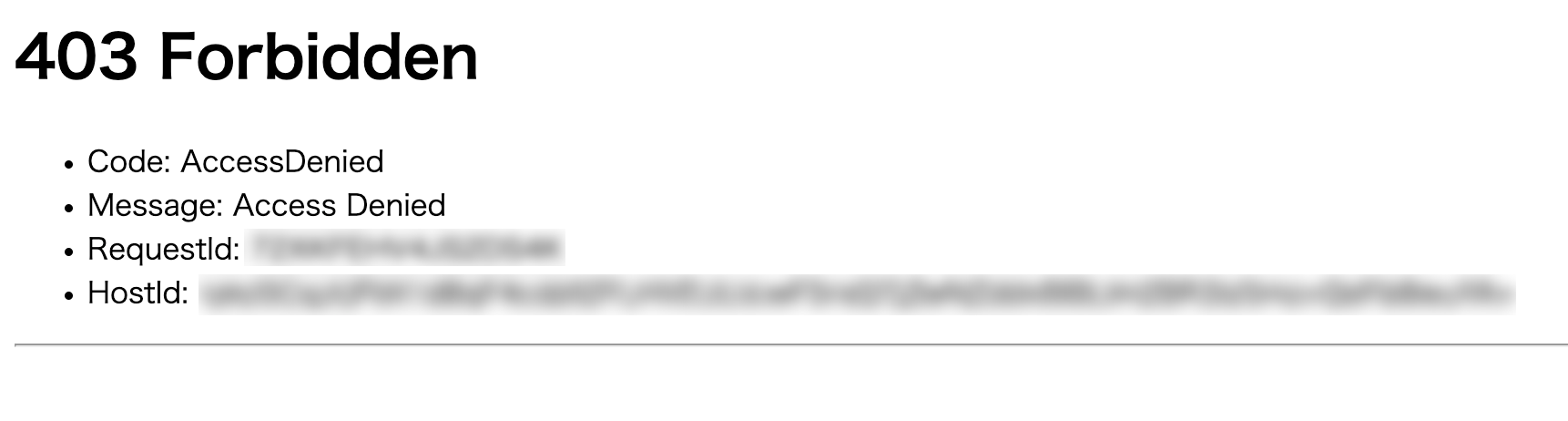

In addition, requests made directly to the S3 bucket are denied.
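The same checks can be done from a script instead of a browser. The following is a minimal sketch using only the Python standard library. The function names are hypothetical, and the URL you pass in is your own distribution's domain; it assumes index.html was uploaded to the bucket root as above.

```python
import base64
import urllib.error
import urllib.request


def build_request(url: str, user=None, password=None) -> urllib.request.Request:
    """Build a GET request, adding a Basic Authorization header if credentials are given."""
    request = urllib.request.Request(url)
    if user is not None:
        token = base64.b64encode(f"{user}:{password}".encode()).decode()
        request.add_header("Authorization", f"Basic {token}")
    return request


def fetch_status(request: urllib.request.Request) -> int:
    """Return the HTTP status code, including error codes such as 401 or 403."""
    try:
        with urllib.request.urlopen(request, timeout=10) as response:
            return response.status
    except urllib.error.HTTPError as error:
        return error.code


def verify(docs_url: str, user: str, password: str) -> bool:
    """Expect 401 without credentials and 200 with valid ones."""
    without_auth = fetch_status(build_request(docs_url))
    with_auth = fetch_status(build_request(docs_url, user, password))
    return without_auth == 401 and with_auth == 200
```

For example, `verify("https://<distribution domain>/index.html", "admin", "passW0rd")` should return True once the environment is deployed and the documents are uploaded.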